Overview¶

The Vitis vision library has been designed to work in the Vitis development environment, and provides a software interface for computer vision functions accelerated on an FPGA device. Vitis vision library functions are mostly similar in functionality to their OpenCV equivalent. Any deviations, if present, are documented.

See also

For more information on the Vitis vision library please refer Prerequisites section

To familiarize yourself with the steps required to use the Vitis vision library functions, see the Using the Vitis vision Library.

Basic Features¶

All Vitis vision library functions follow a common format. The following properties hold true for all the functions.

- All the functions are designed as templates and all arguments that

are images, must be provided as

xf::cv::Mat. - All functions are defined in the

xf::cvnamespace. - Some of the major template arguments are:

- Maximum size of the image to be processed

- Datatype defining the properties of each pixel

- Number of pixels to be processed per clock cycle

- Other compile-time arguments relevent to the functionality.

The Vitis vision library contains enumerated datatypes which enables you to

configure xf::cv::Mat. For more details on xf::cv::Mat, see the xf::cv::Mat

Image Container Class.

Vitis Vision Kernel on Vitis¶

The Vitis vision library is designed to be used with the Vitis development environment.

The OpenCL host code is written in the testbench file, whereas the calls to Vitis

Vision functions are done from the accel file.

The image containers for Vitis vision library functions are xf::cv::Mat

objects. For more information, see the xf::cv::Mat Image Container

Class.

Vitis Vision Library Contents¶

The following table lists the contents of the Vitis vision library.

| Folder | Details |

|---|---|

| L1/examples | Contains the sample testbench code to facilitate running unit tests on Vitis/Vivado HLS. The examples/ has folders with algorithm names. Each algorithm folder contains testbench, accel, config, Makefile , Json file and a ‘build’ folder. |

| L1/include/aie | Contains the infrastructure headers and AIE kernel definitions |

| L1/include/common | Contains the common library infrastructure headers, such as types specific to the library. |

| L1/include/core | Contains the core library

functionality headers, such as

the math functions. |

| L1/include/features | Contains the feature extraction

kernel function definitions. For

example, Harris. |

| L1/include/imgproc | Contains all the kernel function definitions related to image proce ssing definitions. |

| L1/include/video | Contains all the kernel function definitions, related to video proc essing functions.eg:Optical flow |

| L1/include/dnn | Contains all the kernel function definitions, related to deep lea rning preprocessing. |

| L1/tests | Contains all test folders to run simulations, synthesis and export RTL.The tests folder contains the folders with algorithm names.Each algorithm folder further contains configuration folders, that has makefile and tcl files to run tests. |

| L1/examples/build | Contains xf_config_params.h file, which has configurable macros and varibales related to the particula r example. |

| L1/lib/sw | Contains the AIE data-movers library object files |

| L2/examples | Contains the sample testbench code to facilitate running unit tests on Vitis. The examples/ contains the folders with algorithm names. Each algorithm folder contains testbench, accel, config, Makefile , Json file and a ‘build’ folder. |

| L2/tests | Contains all test folders to run software, hardware emulations and hardware build. The tests cont ains folders with algorithm names. Each algorithm folder further cont ains configuration folders, that has makefile and config files to run PL tests. |

| L2/tests/aie | Contains all test folders to run x86 simulation, hardware emulation and hardware build. The tests cont ains folders with algorithm names. Each algorithm folder further cont ains configuration folders, that has makefile, testbench, config and other required files to run the AIE tests |

| L2/examples/build | Contains xf_config_params.h file, which has configurable macros and varibales related to the particula r example. |

| L3/examples | Contains the sample testbench code to build pipeline functions on Vitis. The examples/ contains the folders with algorithm names. Each algorithm folder contains testbench, accel, config, Makefile , Json file and a ‘build’ folder. |

| L3/tests | Contains all test folders to run software, hardware emulations and hardware build.The tests cont ains folders with algorithm names. Each algorithm name folder contai ns the configuration folders, inside configuration folders makefile is present to run tests. |

| L3/examples/build | Contains xf_config_params.h file, which has configurable macros and varibales related to the particula r example. |

| L3/benchmarks | Contains benchmark examples to compare the software implementation versus FPGA implementation using Vitis vision library. |

| ext | Contains the utility functions related to opencl hostcode. |

Getting Started with Vitis Vision¶

Describes the methodology to create a kernel, corresponding host code and a suitable makefile to compile an Vitis Vision kernel for any of the supported platforms in Vitis. The subsequent section also explains the methodology to verify the kernel in various emulation modes and on the hardware.

Prerequisites¶

- Valid installation of Vitis™ 2022.1 or later version and the corresponding licenses.

- Download the Vitis Vision library from github.

- Install the card for which the platform is supported in Vitis 2022.1 or later versions.

- If targeting an embedded platform, downlaod the platform, common images and set up the evaluation board.

- Xilinx® Runtime (XRT) must be installed. XRT provides software interface to Xilinx FPGAs.

- Install/compile OpenCV libraries(with compatible libjpeg.so). Appropriate version (X86/aarch32/aarch64) of compiler must be used based on the available processor for the target board.

- libOpenCL.so must be installed if not present along with the platform.

Note

All Vitis Vision functions were tested against OpenCV version - 4.4.0

Vitis Design Methodology¶

There are three critical components in making a kernel work on a platform using Vitis™:

- Host code with OpenCL constructs

- Wrappers around HLS Kernel(s)

- Makefile to compile the kernel for emulation or running on hardware.

Host Code with OpenCL¶

Host code is compiled for the host machine that runs on the host and provides the data and control signals to the attached hardware with the FPGA. The host code is written using OpenCL constructs and provides capabilities for setting up, and running a kernel on the FPGA. The following functions are executed using the host code:

- Loading the kernel binary on the FPGA – xcl::import_binary_file() loads the bitstream and programs the FPGA to enable required processing of data.

- Setting up memory buffers for data transfer – Data needs to be sent and read from the DDR memory on the hardware. cl::Buffers are created to allocate required memory for transferring data to and from the hardware.

- Transfer data to and from the hardware –enqueueWriteBuffer() and enqueueReadBuffer() are used to transfer the data to and from the hardware at the required time.

- Execute kernel on the FPGA – There are functions to execute kernels on the FPGA. There can be single kernel execution or multiple kernel execution that could be asynchronous or synchronous with each other. Commonly used command is enqueueTask().

- Profiling the performance of kernel execution – The host code in OpenCL also enables measurement of the execution time of a kernel on the FPGA. The function used in our examples for profiling is getProfilingInfo().

Wrappers around HLS Kernel(s)¶

All Vitis Vision kernels are provided with C++ function templates (located at <Github repo>/include) with image containers as objects of xf::cv::Mat class. In addition, these kernels will work either in stream based (where complete image is read continuously) or memory mapped (where image data access is in blocks).

Vitis flow (OpenCL) requires kernel interfaces to be memory pointers with width in power(s) of 2. So glue logic is required for converting memory pointers to xf::cv::Mat class data type and vice-versa when interacting with Vitis Vision kernel(s). Wrapper(s) are build over the kernel(s) with this glue logic. Below examples will provide a methodology to handle different kernel (Vitis Vision kernels located at <Github repo>/include) types (stream and memory mapped).

Stream Based Kernels¶

To facilitate the conversion of pointer to xf::Mat and vice versa, two adapter functions are included as part of Vitis Vision xf::cv::Array2xfMat() and xf::cv::xfMat2Array(). It is necessary for the xf::Mat objects to be invoked as streams using HLS pragma with a minimum depth of 2. This results in a top-level (or wrapper) function for the kernel as shown below:

extern “C”

{

void func_top (ap_uint *gmem_in, ap_uint *gmem_out, ...) {

xf::cv::Mat<…> in_mat(…), out_mat(…);

#pragma HLS dataflow

xf::cv::Array2xfMat<…> (gmem_in, in_mat);

xf::cv::Vitis Vision-func<…> (in_mat, out_mat…);

xf::cv::xfMat2Array<…> (gmem_out, out_mat);

}

}

The above illustration assumes that the data in xf::cv::Mat is being streamed in and streamed out. You can also create a pipeline with multiple functions in pipeline instead of just one Vitis Vision function.

For the stream based kernels with different inputs of different sizes, multiple instances of the adapter functions are necessary. For this,

extern “C” {

void func_top (ap_uint *gmem_in1, ap_uint *gmem_in2, ap_uint *gmem_in3, ap_uint *gmem_out, ...) {

xf::cv::Mat<...,HEIGHT,WIDTH,…> in_mat1(…), out_mat(…);

xf::cv::Mat<...,HEIGHT/4,WIDTH,…> in_mat2(…), in_mat3(…);

#pragma HLS dataflow

xf::cv::accel_utils obj_a, obj_b;

obj_a.Array2xfMat<…,HEIGHT,WIDTH,…> (gmem_in1, in_mat1);

obj_b.Array2xfMat<…,HEIGHT/4,WIDTH,…> (gmem_in2, in_mat2);

obj_b.Array2xfMat<…,HEIGHT/4,WIDTH,…> (gmem_in3, in_mat3);

xf::cv::Vitis-Vision-func(in_mat1, in_mat2, int_mat3, out_mat…);

xf::cv::xfMat2Array<…> (gmem_out, out_mat);

}

}

For the stream based implementations, the data must be fetched from the input AXI and must be pushed to xfMat as required by the xfcv kernels for that particular configuration. Likewise, the same operations must be performed for the output of the xfcv kernel. To perform this, two utility functions are provided, xf::cv::Array2xfMat() and xf::cv::xfMat2Array().

Array2xfMat¶

This function converts the input array to xf::cv::Mat. The Vitis Vision kernel would require the input to be of type, xf::cv::Mat. This function would read from the array pointer and write into xf::cv::Mat based on the particular configuration (bit-depth, channels, pixel-parallelism) the xf::cv::Mat was created. Array2xfMat supports line stride. Line stride is the number of pixels which needs to be added to the address in the first pixel of a row in order to access the first pixel of the next row.

//Without Line stride support

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC>

void Array2xfMat(ap_uint< PTR_WIDTH > *srcPtr, xf::cv::Mat<MAT_T,ROWS,COLS,NPC,XFCVDEPTH>& dstMat)

//With Line stride support

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC>

void Array2xfMat(ap_uint< PTR_WIDTH > *srcPtr, xf::cv::Mat<MAT_T,ROWS,COLS,NPC,XFCVDEPTH>& dstMat, int stride)

| Parameter | Description |

|---|---|

| PTR_WIDTH | Data width of the input pointer. The value must be power 2, starting from 8 to 512. |

| MAT_T | Input Mat type. Example XF_8UC1, XF_16UC1, XF_8UC3 and XF_8UC4 |

| ROWS | Maximum height of image |

| COLS | Maximum width of image |

| NPC | Number of pixels computed in parallel. Example XF_NPPC1, XF_NPPC8 |

| XFCVDEPTH | Depth of the Output image. |

| srcPtr | Input pointer. Type of the pointer based on the PTR_WIDTH. |

| dstMat | Output image of type xf::cv::Mat |

| stride | Line stride. Default value is dstMat.cols |

xfMat2Array¶

This function converts the input xf::cv::Mat to output array. The output of the xf::kernel function will be xf::cv::Mat, and it will require to convert that to output pointer. xfMat2Array supports line stride. Line stride is the number of pixels which needs to be added to the address in the first pixel of a row in order to access the first pixel of the next row.

//Without Line stride support

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC, int XFCVDEPTH = _XFCVDEPTH_DEFAULT, int FILLZERO = 1>

void xfMat2Array(xf::cv::Mat<MAT_T,ROWS,COLS,NPC,XFCVDEPTH>& srcMat, ap_uint< PTR_WIDTH > *dstPtr)

//With Line stride support

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC, int XFCVDEPTH = _XFCVDEPTH_DEFAULT, int FILLZERO = 1>

void xfMat2Array(xf::cv::Mat<MAT_T,ROWS,COLS,NPC,XFCVDEPTH>& srcMat, ap_uint< PTR_WIDTH > *dstPtr, int stride)

| Parameter | Description |

|---|---|

| PTR_WIDTH | Data width of the output pointer. The value must be power 2, from 8 to 512. |

| MAT_T | Input Mat type. Example XF_8UC1, XF_16UC1, XF_8UC3 and XF_8UC4 |

| ROWS | Maximum height of image |

| COLS | Maximum width of image |

| NPC | Number of pixels computed in parallel. Example XF_NPPC1, XF_NPPC8 |

| XFCVDEPTH | Depth of the Input image. |

| FILLZERO | Line padding Flag. Use when line stride support is needed. Default value is 1 |

| dstPtr | Output pointer. Type of the pointer based on the PTR_WIDTH. |

| srcMat | Input image of type xf::cv::Mat |

| stride | Line stride. Default value is srcMat.cols |

Interface pointer widths¶

Minimum pointer widths for different configurations is shown in the following table:

| MAT type | Parallelism | Min PTR_WIDTH | Max PTR_WIDTH |

|---|---|---|---|

| XF_8UC1 | XF_NPPC1 | 8 | 512 |

| XF_16UC1 | XF_NPPC1 | 16 | 512 |

| XF_ 8UC1 | XF_NPPC8 | 64 | 512 |

| XF_ 16UC1 | XF_NPPC8 | 128 | 512 |

| XF_ 8UC3 | XF_NPPC1 | 32 | 512 |

| XF_ 8UC3 | XF_NPPC8 | 256 | 512 |

| XF_8UC4 | XF_NPPC8 | 256 | 512 |

| XF_8UC3 | XF_NPPC16 | 512 | 512 |

Kernel-to-Kernel streaming¶

There are two utility functions available in Vitis Vision, axiStrm2xfMat and xfMat2axiStrm to support streaming of data between two kernels. For more details on kernel-to-kernel streaming, refer to the “Streaming Data Transfers Between the Kernels” section of [UG1393](https://www.xilinx.com/support/documentation/sw_manuals/xilinx2021_1/ug1393-vitis-application-acceleration.pdf) document.

axiStrm2xfMat¶

axiStrm2xfMat is used by consumer kernel to support streaming data transfer between two kernels. Consumer kernel receives data from producer kernel through kernel streaming interface which is defined by hls:stream with the ap_axiu< PTR_WIDTH, 0, 0, 0> data type. axiStrm2xfMat would read from AXI stream and write into xf::cv:Mat based on particular configuration (bit-depth, channels, pixel-parallelism) the xf::cv:Mat was created.

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC>

void axiStrm2xfMat(hls::stream<ap_axiu<PTR_WIDTH, 0, 0, 0> >& srcPtr, xf::cv::Mat<MAT_T, ROWS, COLS, NPC>& dstMat)

| Parameter | Description |

|---|---|

| PTR_WIDTH | Data width of the input pointer. The value must be power 2, starting from 8 to 512. |

| MAT_T | Input Mat type. Example XF_8UC1, XF_16UC1, XF_8UC3 and XF_8UC4 |

| ROWS | Maximum height of image |

| COLS | Maximum width of image |

| NPC | Number of pixels computed in parallel. Example XF_NPPC1, XF_NPPC8 |

| srcPtr | Input image of type hls::stream<ap_axiu<PTR_WIDTH, 0, 0, 0> > |

| dstMat | Output image of type xf::cv::Mat |

xfMat2axiStrm¶

xfMat2axiStrm is used by producer kernel to support streaming data transfer between two kernels. This function converts the input xf:cv::Mat to AXI stream based on particular configuration (bit-depth, channels, pixel-parallelism).

template <int PTR_WIDTH, int MAT_T, int ROWS, int COLS, int NPC>

void xfMat2axiStrm(xf::cv::Mat<MAT_T, ROWS, COLS, NPC>& srcMat, hls::stream<ap_axiu<PTR_WIDTH, 0, 0, 0> >& dstPtr)

| Parameter | Description |

|---|---|

| PTR_WIDTH | Data width of the input pointer. The value must be power 2, starting from 8 to 512. |

| MAT_T | Input Mat type. Example XF_8UC1, XF_16UC1, XF_8UC3 and XF_8UC4 |

| ROWS | Maximum height of image |

| COLS | Maximum width of image |

| NPC | Number of pixels computed in parallel. Example XF_NPPC1, XF_NPPC8 |

| srcPtr | Input image of type hls::stream<ap_axiu<PTR_WIDTH, 0, 0, 0> > |

| dstMat | Output image of type xf::cv::Mat |

Memory Mapped Kernels¶

In the memory map based kernels such as crop, Mean-shift tracking and bounding box, the input read will be for particular block of memory based on the requirement for the algorithm. The streaming interfaces will require the image to be read in raster scan manner, which is not the case for the memory mapped kernels. The methodology to handle this case is as follows:

extern “C”

{

void func_top (ap_uint *gmem_in, ap_uint *gmem_out, ...) {

xf::cv::Mat<…> in_mat(…,gmem_in), out_mat(…,gmem_out);

xf::cv::kernel<…> (in_mat, out_mat…);

}

}

The gmem pointers must be mapped to the xf::cv::Mat objects during the object creation, and then the memory mapped kernels are called with these mats at the interface. It is necessary that the pointer size must be same as the size required for the xf::Vitis-Vision-func, unlike the streaming method where any higher size of the pointers (till 512-bits) are allowed.

Makefile¶

Examples for makefile are provided in the examples and tests section of GitHub.

Design example Using Library on Vitis¶

Following is a multi-kernel example, where different kernel runs sequentially in a pipeline to form an application. This example performs Canny edge detection, where two kernels are involved, Canny and edge tracing. Canny function will take gray-scale image as input and provided the edge information in 3 states (weak edge (1), strong edge (3), and background (0)), which is being fed into edge tracing, which filters out the weak edges. The prior works in a streaming based implementation and the later in a memory mapped manner.

Host code¶

The following is the Host code for the canny edge detection example. The host code sets up the OpenCL platform with the FPGA of processing required data. In the case of Vitis Vision example, the data is an image. Reading and writing of images are enabled using called to functions from Vitis Vision.

// setting up device and platform

std::vector<cl::Device> devices = xcl::get_xil_devices();

cl::Device device = devices[0];

cl::Context context(device);

cl::CommandQueue q(context, device,CL_QUEUE_PROFILING_ENABLE);

std::string device_name = device.getInfo<CL_DEVICE_NAME>();

// Kernel 1: Canny

std::string binaryFile=xcl::find_binary_file(device_name,"krnl_canny");

cl::Program::Binaries bins = xcl::import_binary_file(binaryFile);

devices.resize(1);

cl::Program program(context, devices, bins);

cl::Kernel krnl(program,"canny_accel");

// creating necessary cl buffers for input and output

cl::Buffer imageToDevice(context, CL_MEM_READ_ONLY,(height*width));

cl::Buffer imageFromDevice(context, CL_MEM_WRITE_ONLY,(height*width/4));

// Set the kernel arguments

krnl.setArg(0, imageToDevice);

krnl.setArg(1, imageFromDevice);

krnl.setArg(2, height);

krnl.setArg(3, width);

krnl.setArg(4, low_threshold);

krnl.setArg(5, high_threshold);

// write the input image data from host to device memory

q.enqueueWriteBuffer(imageToDevice, CL_TRUE, 0,(height*(width)),img_gray.data);

// Profiling Objects

cl_ulong start= 0;

cl_ulong end = 0;

double diff_prof = 0.0f;

cl::Event event_sp;

// Launch the kernel

q.enqueueTask(krnl,NULL,&event_sp);

clWaitForEvents(1, (const cl_event*) &event_sp);

// profiling

event_sp.getProfilingInfo(CL_PROFILING_COMMAND_START,&start);

event_sp.getProfilingInfo(CL_PROFILING_COMMAND_END,&end);

diff_prof = end-start;

std::cout<<(diff_prof/1000000)<<"ms"<<std::endl;

// Kernel 2: edge tracing

cl::Kernel krnl2(program,"edgetracing_accel");

cl::Buffer imageFromDeviceedge(context, CL_MEM_WRITE_ONLY,(height*width));

// Set the kernel arguments

krnl2.setArg(0, imageFromDevice);

krnl2.setArg(1, imageFromDeviceedge);

krnl2.setArg(2, height);

krnl2.setArg(3, width);

// Profiling Objects

cl_ulong startedge= 0;

cl_ulong endedge = 0;

double diff_prof_edge = 0.0f;

cl::Event event_sp_edge;

// Launch the kernel

q.enqueueTask(krnl2,NULL,&event_sp_edge);

clWaitForEvents(1, (const cl_event*) &event_sp_edge);

// profiling

event_sp_edge.getProfilingInfo(CL_PROFILING_COMMAND_START,&startedge);

event_sp_edge.getProfilingInfo(CL_PROFILING_COMMAND_END,&endedge);

diff_prof_edge = endedge-startedge;

std::cout<<(diff_prof_edge/1000000)<<"ms"<<std::endl;

//Copying Device result data to Host memory

q.enqueueReadBuffer(imageFromDeviceedge, CL_TRUE, 0,(height*width),out_img_edge.data);

q.finish();

Top level kernel¶

Below is the top-level/wrapper function with all necessary glue logic.

// streaming based kernel

#include "xf_canny_config.h"

extern "C" {

void canny_accel(ap_uint<INPUT_PTR_WIDTH> *img_inp, ap_uint<OUTPUT_PTR_WIDTH> *img_out, int rows, int cols,int low_threshold,int high_threshold)

{

#pragma HLS INTERFACE m_axi port=img_inp offset=slave bundle=gmem1

#pragma HLS INTERFACE m_axi port=img_out offset=slave bundle=gmem2

#pragma HLS INTERFACE s_axilite port=img_inp bundle=control

#pragma HLS INTERFACE s_axilite port=img_out bundle=control

#pragma HLS INTERFACE s_axilite port=rows bundle=control

#pragma HLS INTERFACE s_axilite port=cols bundle=control

#pragma HLS INTERFACE s_axilite port=low_threshold bundle=control

#pragma HLS INTERFACE s_axilite port=high_threshold bundle=control

#pragma HLS INTERFACE s_axilite port=return bundle=control

xf::cv::Mat<XF_8UC1, HEIGHT, WIDTH, INTYPE> in_mat(rows,cols);

xf::cv::Mat<XF_2UC1, HEIGHT, WIDTH, XF_NPPC32> dst_mat(rows,cols);

#pragma HLS DATAFLOW

xf::cv::Array2xfMat<INPUT_PTR_WIDTH,XF_8UC1,HEIGHT,WIDTH,INTYPE>(img_inp,in_mat);

xf::cv::Canny<FILTER_WIDTH,NORM_TYPE,XF_8UC1,XF_2UC1,HEIGHT, WIDTH,INTYPE,XF_NPPC32,XF_USE_URAM>(in_mat,dst_mat,low_threshold,high_threshold);

xf::cv::xfMat2Array<OUTPUT_PTR_WIDTH,XF_2UC1,HEIGHT,WIDTH,XF_NPPC32>(dst_mat,img_out);

}

}

// memory mapped kernel

#include "xf_canny_config.h"

extern "C" {

void edgetracing_accel(ap_uint<INPUT_PTR_WIDTH> *img_inp, ap_uint<OUTPUT_PTR_WIDTH> *img_out, int rows, int cols)

{

#pragma HLS INTERFACE m_axi port=img_inp offset=slave bundle=gmem3

#pragma HLS INTERFACE m_axi port=img_out offset=slave bundle=gmem4

#pragma HLS INTERFACE s_axilite port=img_inp bundle=control

#pragma HLS INTERFACE s_axilite port=img_out bundle=control

#pragma HLS INTERFACE s_axilite port=rows bundle=control

#pragma HLS INTERFACE s_axilite port=cols bundle=control

#pragma HLS INTERFACE s_axilite port=return bundle=control

xf::cv::Mat<XF_2UC1, HEIGHT, WIDTH, XF_NPPC32> _dst1(rows,cols,img_inp);

xf::cv::Mat<XF_8UC1, HEIGHT, WIDTH, XF_NPPC8> _dst2(rows,cols,img_out);

xf::cv::EdgeTracing<XF_2UC1,XF_8UC1,HEIGHT, WIDTH, XF_NPPC32,XF_NPPC8,XF_USE_URAM>(_dst1,_dst2);

}

}

Evaluating the Functionality¶

You can build the kernels and test the functionality through software emulation, hardware emulation, and running directly on a supported hardware with the FPGA. Use the following commands to setup the basic environment:

$ cd <path to the folder where makefile is present>

$ source <path to the Vitis installation folder>/Vitis/<version number>/settings64.sh

$ export DEVICE=<path-to-platform-directory>/<platform>.xpfm

For PCIe devices, set the following:

$ source <path to Xilinx_xrt>/setup.sh

$ export OPENCV_INCLUDE=< path-to-opencv-include-folder >

$ export OPENCV_LIB=< path-to-opencv-lib-folder >

$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:< path-to-opencv-lib-folder >

For embedded devices, set the following:

Download the platform, and common-image from Xilinx Download Center. Run the sdk.sh script from the common-image directory to install sysroot using the command :

$ ./sdk.sh -y -d ./ -p

Unzip the rootfs file :

$ gunzip ./rootfs.ext4.gz

$ export SYSROOT=< path-to-platform-sysroot >

Software Emulation¶

Software emulation is equivalent to running a C-simulation of the kernel. The time for compilation is minimal, and is therefore recommended to be the first step in testing the kernel. Following are the steps to build and run for the software emulation:

For PCIe devices:

$ make host xclbin TARGET=sw_emu

$ make run TARGET=sw_emu

For embedded devices:

$ make host xclbin TARGET=sw_emu HOST_ARCH=< aarch32 | aarch64 >

$ make run TARGET=sw_emu HOST_ARCH=< aarch32 | aarch64 >

Hardware Emulation¶

Hardware emulation runs the test on the generated RTL after synthesis of the C/C++ code. The simulation, since being done on RTL requires longer to complete when compared to software emulation. Following are the steps to build and run for the hardware emulation:

For PCIe devices:

$ make host xclbin TARGET=hw_emu

$ make run TARGET=hw_emu

For embedded devices:

$ make host xclbin TARGET=hw_emu HOST_ARCH=< aarch32 | aarch64 >

$ make run TARGET=hw_emu HOST_ARCH=< aarch32 | aarch64 >

Testing on the Hardware¶

To test on the hardware, the kernel must be compiled into a bitstream (building for hardware). This would consume some time since the C/C++ code must be converted to RTL, run through synthesis and implementation process before a bitstream is created. As a prerequisite the drivers has to be installed for corresponding XSA, for which the example was built for. Following are the steps to build the kernel and run on a hardware:

For PCIe devices:

$ make host xclbin TARGET=hw

$ make run TARGET=hw

For embedded devices:

$ make host xclbin TARGET=hw HOST_ARCH=< aarch32 | aarch64 >

$ make run TARGET=< sw_emu|hw_emu|hw > HOST_ARCH=< aarch32 | aarch64 > #This command will generate only the sd_card folder in case of hardware build.

Note. For hw run on embedded devices, copy the generated sd_card folder content under package_hw to an SD Card. More information on preparing the SD Card is available [here](https://xilinx-wiki.atlassian.net/wiki/spaces/A/pages/18842385/How+to+format+SD+card+for+SD+boot#HowtoformatSDcardforSDboot-CopingtheImagestotheNewPartitions). After successful booting of the board, run the following commands:

cd /mnt

export XCL_BINDIR=< xclbin-folder-present-in-the-sd_card > #For example, “export XCL_BINDIR=xclbin_zcu102_base_hw”

./run_script.sh

Using the Vitis vision Library¶

This section describes using the Vitis vision library in the Vitis development environment.

Note: The instructions in this section assume that you have downloaded and installed all the required packages.

include folder constitutes all the necessary components to build a Computer Vision or Image Processing pipeline using the library. The folders common and core contain the infrastructure that the library functions need for basic functions, Mat class, and macros. The library functions are categorized into 4 folders, features, video, dnn, and imgproc based on the operation they perform. The names of the folders are self-explanatory.

To work with the library functions, you need to include the path to the the include folder in the Vitis project. You can include relevant header files for the library functions you will be working with after you source the include folder’s path to the compiler. For example, if you would like to work with Harris Corner Detector and Bilateral Filter, you must use the following lines in the host code:

#include “features/xf_harris.hpp” //for Harris Corner Detector

#include “imgproc/xf_bilateral_filter.hpp” //for Bilateral Filter

#include “video/xf_kalmanfilter.hpp”

After the headers are included, you can work with the library functions as described in the Vitis vision Library API Reference using the examples in the examples folder as reference.

The following table gives the name of the header file, including the folder name, which contains the library function.

| Function Name | File Path in the include folder |

|---|---|

| xf::cv::accumulate | imgproc/xf_accumulate_image.hpp |

| xf::cv::accumulateSquare | imgproc/xf_accumulate_squared.hpp |

| xf::cv::accumulateWeighted | imgproc/xf_accumulate_weighted.hp p |

| xf::cv::absdiff, xf::cv::add, xf::cv::subtract, xf::cv::bitwise_and, xf::cv::bitwise_or, xf::cv::bitwise_not, xf::cv::bitwise_xor,xf::cv::multiply ,xf::cv::Max, xf::cv::Min,xf::cv::compare, xf::cv::zero, xf::cv::addS, xf::cv::SubS, xf::cv::SubRS ,xf::cv::compareS, xf::cv::MaxS, xf::cv::MinS, xf::cv::set | core/xf_arithm.hpp |

| xf::cv::addWeighted | imgproc/xf_add_weighted.hpp |

| xf::cv::autowhitebalance | imgproc/xf_autowhitebalance.hpp |

| xf::cv::autoexposurecorrection | imgproc/xf_aec.hpp |

| xf::cv::bilateralFilter | imgproc/xf_bilaterealfilter.hpp |

| xf::cv::blackLevelCorrection | imgproc/xf_black_level.hpp |

| xf::cv::bfmatcher | imgproc/xf_bfmatcher.hpp |

| xf::cv::boxFilter | imgproc/xf_box_filter.hpp |

| xf::cv::boundingbox | imgproc/xf_boundingbox.hpp |

| xf::cv::badpixelcorrection | imgproc/xf_bpc.hpp |

| xf::cv::Canny | imgproc/xf_canny.hpp |

| xf::cv::colorcorrectionmatrix | imgproc/xf_colorcorrectionmatrix. hpp |

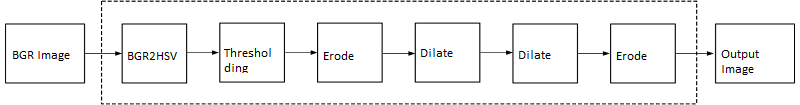

| xf::cv::Colordetect | imgproc/xf_colorthresholding.hpp, imgproc/xf_bgr2hsv.hpp, imgproc/xf_erosion.hpp, imgproc/xf_dilation.hpp |

| xf::cv::merge | imgproc/xf_channel_combine.hpp |

| xf::cv::extractChannel | imgproc/xf_channel_extract.hpp |

| xf::cv::ccaCustom | imgproc/xf_cca_custom.hpp |

| xf::cv::clahe | imgproc/xf_clahe.hpp |

| xf::cv::convertTo | imgproc/xf_convert_bitdepth.hpp |

| xf::cv::crop | imgproc/xf_crop.hpp |

| xf::cv::distanceTransform | imgproc/xf_distancetransform.hpp |

| xf::cv::nv122iyuv, xf::cv::nv122rgba, xf::cv::nv122yuv4, xf::cv::nv212iyuv, xf::cv::nv212rgba, xf::cv::nv212yuv4, xf::cv::rgba2yuv4, xf::cv::rgba2iyuv, xf::cv::rgba2nv12, xf::cv::rgba2nv21, xf::cv::uyvy2iyuv, xf::cv::uyvy2nv12, xf::cv::uyvy2rgba, xf::cv::yuyv2iyuv, xf::cv::yuyv2nv12, xf::cv::yuyv2rgba, xf::cv::rgb2iyuv,xf::cv::rgb2nv12, xf::cv::rgb2nv21, xf::cv::rgb2yuv4, xf::cv::rgb2uyvy, xf::cv::rgb2yuyv, xf::cv::rgb2bgr, xf::cv::bgr2uyvy, xf::cv::bgr2yuyv, xf::cv::bgr2rgb, xf::cv::bgr2nv12, xf::cv::bgr2nv21, xf::cv::iyuv2nv12, xf::cv::iyuv2rgba, xf::cv::iyuv2rgb, xf::cv::iyuv2yuv4, xf::cv::nv122uyvy, xf::cv::nv122yuyv, xf::cv::nv122nv21, xf::cv::nv212rgb, xf::cv::nv212bgr, xf::cv::nv212uyvy, xf::cv::nv212yuyv, xf::cv::nv212nv12, xf::cv::uyvy2rgb, xf::cv::uyvy2bgr, xf::cv::uyvy2yuyv, xf::cv::yuyv2rgb, xf::cv::yuyv2bgr, xf::cv::yuyv2uyvy, xf::cv::rgb2gray, xf::cv::bgr2gray, xf::cv::gray2rgb, xf::cv::gray2bgr, xf::cv::rgb2xyz, xf::cv::bgr2xyz… | imgproc/xf_cvt_color.hpp |

| xf::cv::densePyrOpticalFlow | video/xf_pyr_dense_optical_flow.h pp |

| xf::cv::DenseNonPyrLKOpticalFlow | video/xf_dense_npyr_optical_flow. hpp |

| xf::cv::dilate | imgproc/xf_dilation.hpp |

| xf::cv::demosaicing | imgproc/xf_demosaicing.hpp |

| xf::cv::erode | imgproc/xf_erosion.hpp |

| xf::cv::fast | features/xf_fast.hpp |

| xf::cv::filter2D | imgproc/xf_custom_convolution.hpp |

| xf::cv::flip | features/xf_flip.hpp |

| xf::cv::GaussianBlur | imgproc/xf_gaussian_filter.hpp |

| xf::cv::gaincontrol | imgproc/xf_gaincontrol.hpp |

| xf::cv::gammacorrection | imgproc/xf_gammacorrection.hpp |

| xf::cv::gtm | imgproc/xf_gtm.hpp |

| xf::cv::cornerHarris | features/xf_harris.hpp |

| xf::cv::calcHist | imgproc/xf_histogram.hpp |

| xf::cv::equalizeHist | imgproc/xf_hist_equalize.hpp |

| xf::cv::extractExposureFrames | imgproc/xf_extract_eframes.hpp |

| xf::cv::HDRMerge_bayer | imgproc/xf_hdrmerge.hpp |

| xf::cv::HOGDescriptor | imgproc/xf_hog_descriptor.hpp |

| xf::cv::Houghlines | imgproc/xf_houghlines.hpp |

| xf::cv::inRange | imgproc/xf_inrange.hpp |

| xf::cv::integralImage | imgproc/xf_integral_image.hpp |

| xf::cv::KalmanFilter | video/xf_kalmanfilter.hpp |

| xf::cv::Lscdistancebased | imgproc/xf_lensshadingcorrection .hpp |

| xf::cv::LTM::process | imgproc/xf_ltm.hpp |

| xf::cv::LUT | imgproc/xf_lut.hpp |

| xf::cv::magnitude | core/xf_magnitude.hpp |

| xf::cv::MeanShift | imgproc/xf_mean_shift.hpp |

| xf::cv::meanStdDev | core/xf_mean_stddev.hpp |

| xf::cv::medianBlur | imgproc/xf_median_blur.hpp |

| xf::cv::minMaxLoc | core/xf_min_max_loc.hpp |

| xf::cv::modefilter | imgproc/xf_modefilter.hpp |

| xf::cv::OtsuThreshold | imgproc/xf_otsuthreshold.hpp |

| xf::cv::phase | core/xf_phase.hpp |

| xf::cv::preProcess | dnn/xf_pre_process.hpp |

| xf::cv::paintmask | imgproc/xf_paintmask.hpp |

| xf::cv::pyrDown | imgproc/xf_pyr_down.hpp |

| xf::cv::pyrUp | imgproc/xf_pyr_up.hpp |

| xf::cv::xf_QuatizationDithering | imgproc/xf_quantizationdithering .hpp |

| xf::cv::reduce | imgrpoc/xf_reduce.hpp |

| xf::cv::remap | imgproc/xf_remap.hpp |

| xf::cv::resize | imgproc/xf_resize.hpp |

| xf::cv::rgbir2bayer | imgproc/xf_rgbir.hpp |

| xf::cv::convertScaleAbs | imgproc/xf_convertscaleabs.hpp |

| xf::cv::Scharr | imgproc/xf_scharr.hpp |

| xf::cv::SemiGlobalBM | imgproc/xf_sgbm.hpp |

| xf::cv::Sobel | imgproc/xf_sobel.hpp |

| xf::cv::StereoPipeline | imgproc/xf_stereo_pipeline.hpp |

| xf::cv::sum | imgproc/xf_sum.hpp |

| xf::cv::StereoBM | imgproc/xf_stereoBM.hpp |

| xf::cv::SVM | imgproc/xf_svm.hpp |

| xf::cv::lut3d | imgproc/xf_3dlut.hpp |

| xf::cv::Threshold | imgproc/xf_threshold.hpp |

| xf::cv::warpTransform | imgproc/xf_warp_transform.hpp |

Changing the Hardware Kernel Configuration¶

To modify the configuration of any function, update the following file:

<path to vitis vision git folder>/vision/L1/examples/<function>/build/xf_config_params.h .

Using the Vitis vision Library Functions on Hardware¶

The following table lists the Vitis vision library functions and the command to run the respective examples on hardware. It is assumed that your design is completely built and the board has booted up correctly.

| Example | Function Name | Usage on Hardware |

|---|---|---|

| accumulate | xf::cv::accumulate | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| accumulatesq uared | xf::cv::accumulateSquare | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| accumulatewe ighted | xf::cv::accumulateWeighted | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| addS | xf::cv::addS | ./<executable name>.elf <path to input image> |

| arithm | xf::cv::absdiff, xf::cv::subtract, xf::cv::bitwise_and, xf::cv::bitwise_or, xf::cv::bitwise_not, xf::cv::bitwise_xor | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| addweighted | xf::cv::addWeighted | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| Autoexposure correction | xf::cv::autoexposurecorr ection | ./<executable name>.elf <path to input image> |

| Autowhite balance | xf::cv::autowhitebalance | ./<executable name>.elf <path to input image> |

| Bilateralfil ter | xf::cv::bilateralFilter | ./<executable name>.elf <path to input image> |

| BlackLevel Correction | xf::cv::blackLevel Correction | ./<executable name>.elf <path to input image> |

| BruteForce | xf::cv::bfmatcher | ./<executable name>.elf <path to input image> |

| Boxfilter | xf::cv::boxFilter | ./<executable name>.elf <path to input image> |

| Badpixelcorr ection | xf::cv::badpixelcorrection | ./<executable name>.elf <path to input image> |

| Boundingbox | xf::cv::boundingbox | ./<executable name>.elf <path to input image> <No of ROI’s> |

| Canny | xf::cv::Canny | ./<executable name>.elf <path to input image> |

| ccaCustom | xf::cv::ccaCustom | ./<executable name>.elf <path to input image> |

| channelcombi ne | xf::cv::merge | ./<executable name>.elf <path to input image 1> <path to input image 2> <path to input image 3> <path to input image 4> |

| Channelextra ct | xf::cv::extractChannel | ./<executable name>.elf <path to input image> |

| CLAHE | xf::cv::clahe | ./<executable name>.elf <path to input image> |

| Colordetect | xf::cv::bgr2hsv, xf::cv::colorthresholding, xf::cv:: erode, xf::cv:: dilate | ./<executable name>.elf <path to input image> |

| color correction matrix | xf::cv::colorcorrection matrix | ./<executable name>.elf <path to input image> |

| compare | xf::cv::compare | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| compareS | xf::cv::compareS | ./<executable name>.elf <path to input image> |

| Convertbitde pth | xf::cv::convertTo | ./<executable name>.elf <path to input image> |

| convertScale Abs | xf::cv::convertScaleAbs | ./<executable name>.elf <path to input image> |

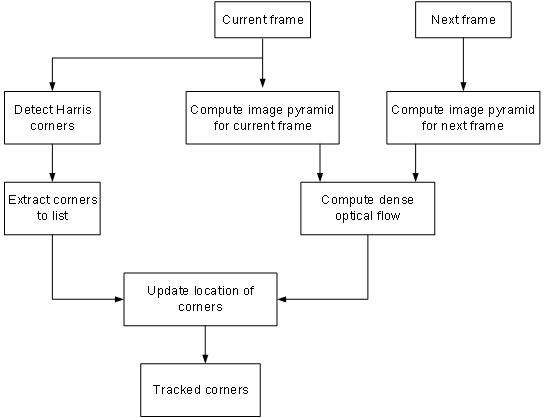

| Cornertracke r | xf::cv::cornerTracker | ./exe <input video> <no. of frames> <Harris Threshold> <No. of frames after which Harris Corners are Reset> |

| crop | xf::cv::crop | ./<executable name>.elf <path to input image> |

| Customconv | xf::cv::filter2D | ./<executable name>.elf <path to input image> |

| cvtcolor IYUV2NV12 | xf::cv::iyuv2nv12 | ./<executable name>.elf <path to input image 1> <path to input image 2> <path to input image 3> |

| cvtcolor IYUV2RGBA | xf::cv::iyuv2rgba | ./<executable name>.elf <path to input image 1> <path to input image 2> <path to input image 3> |

| cvtcolor IYUV2YUV4 | xf::cv::iyuv2yuv4 | ./<executable name>.elf <path to input image 1> <path to input image 2> <path to input image 3> <path to input image 4> <path to input image 5> <path to input image 6> |

| cvtcolor NV122IYUV | xf::cv::nv122iyuv | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor NV122RGBA | xf::cv::nv122rgba | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor NV122YUV4 | xf::cv::nv122yuv4 | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor NV212IYUV | xf::cv::nv212iyuv | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor NV212RGBA | xf::cv::nv212rgba | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor NV212YUV4 | xf::cv::nv212yuv4 | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| cvtcolor RGBA2YUV4 | xf::cv::rgba2yuv4 | ./<executable name>.elf <path to input image> |

| cvtcolor RGBA2IYUV | xf::cv::rgba2iyuv | ./<executable name>.elf <path to input image> |

| cvtcolor RGBA2NV12 | xf::cv::rgba2nv12 | ./<executable name>.elf <path to input image> |

| cvtcolor RGBA2NV21 | xf::cv::rgba2nv21 | ./<executable name>.elf <path to input image> |

| cvtcolor UYVY2IYUV | xf::cv::uyvy2iyuv | ./<executable name>.elf <path to input image> |

| cvtcolor UYVY2NV12 | xf::cv::uyvy2nv12 | ./<executable name>.elf <path to input image> |

| cvtcolor UYVY2RGBA | xf::cv::uyvy2rgba | ./<executable name>.elf <path to input image> |

| cvtcolor YUYV2IYUV | xf::cv::yuyv2iyuv | ./<executable name>.elf <path to input image> |

| cvtcolor YUYV2NV12 | xf::cv::yuyv2nv12 | ./<executable name>.elf <path to input image> |

| cvtcolor YUYV2RGBA | xf::cv::yuyv2rgba | ./<executable name>.elf <path to input image> |

| Demosaicing | xf::cv::demosaicing | ./<executable name>.elf <path to input image> |

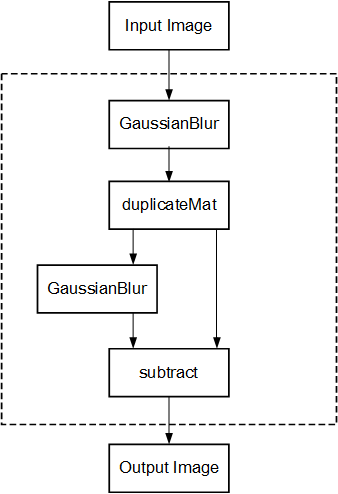

| Difference of Gaussian | xf::cv::GaussianBlur, xf::cv::duplicateMat, and xf::cv::subtract | ./<exe-name>.elf <path to input image> |

| Dilation | xf::cv::dilate | ./<executable name>.elf <path to input image> |

| Distance Transform | xf::cv::distanceTransform | ./<executable name>.elf <path to input image> |

| Erosion | xf::cv::erode | ./<executable name>.elf <path to input image> |

| FAST | xf::cv::fast | ./<executable name>.elf <path to input image> |

| Flip | xf::cv::flip | ./<executable name>.elf <path to input image> |

| Gaussianfilt er | xf::cv::GaussianBlur | ./<executable name>.elf <path to input image> |

| Gaincontrol | xf::cv::gaincontrol | ./<executable name>.elf <path to input image> |

| Gammacorrec tion | xf::cv::gammacorrection | ./<executable name>.elf <path to input image> |

| Global Tone Mapping | xf::cv::gtm | ./<executable name>.elf <path to input image> |

| Harris | xf::cv::cornerHarris | ./<executable name>.elf <path to input image> |

| Histogram | xf::cv::calcHist | ./<executable name>.elf <path to input image> |

| Histequializ e | xf::cv::equalizeHist | ./<executable name>.elf <path to input image> |

| Hog | xf::cv::HOGDescriptor | ./<executable name>.elf <path to input image> |

| Houghlines | xf::cv::HoughLines | ./<executable name>.elf <path to input image> |

| inRange | xf::cv::inRange | ./<executable name>.elf <path to input image> |

| Integralimg | xf::cv::integralImage | ./<executable name>.elf <path to input image> |

| Laplacian Filter | xf::cv::filter2d | ./<executable name>.elf <path to input image> |

| Lkdensepyrof | xf::cv::densePyrOpticalFlo w | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| Lknpyroflow | xf::cv::DenseNonPyr LKOpticalFlow | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| lensshading correction | xf::cv::Lscdistancebased | ./<executable name>.elf <path to input image> |

| Lut | xf::cv::LUT | ./<executable name>.elf <path to input image> |

| Local tone mapping | xf::cv::LTM::process | ./<executable name>.elf <path to input image> |

| Kalman Filter | xf::cv::KalmanFilter | ./<executable name>.elf |

| Magnitude | xf::cv::magnitude | ./<executable name>.elf <path to input image> |

| Max | xf::cv::Max | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| MaxS | xf::cv::MaxS | ./<executable name>.elf <path to input image> |

| meanshifttra cking | xf::cv::MeanShift | ./<executable name>.elf <path to input video/input image files> <Number of objects to track> |

| meanstddev | xf::cv::meanStdDev | ./<executable name>.elf <path to input image> |

| medianblur | xf::cv::medianBlur | ./<executable name>.elf <path to input image> |

| Min | xf::cv::Min | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| MinS | xf::cv::MinS | ./<executable name>.elf <path to input image> |

| Minmaxloc | xf::cv::minMaxLoc | ./<executable name>.elf <path to input image> |

| Mode filter | xf::cv::modefilter | ./<executable name>.elf <path to input image> |

| otsuthreshol d | xf::cv::OtsuThreshold | ./<executable name>.elf <path to input image> |

| paintmask | xf::cv::paintmask | ./<executable name>.elf <path to input image> |

| Phase | xf::cv::phase | ./<executable name>.elf <path to input image> |

| Pyrdown | xf::cv::pyrDown | ./<executable name>.elf <path to input image> |

| Pyrup | xf::cv::pyrUp | ./<executable name>.elf <path to input image> |

| Quantization Dithering | xf::cv::xf_Quatization Dithering | ./<executable name>.elf <path to input image> |

| reduce | xf::cv::reduce | ./<executable name>.elf <path to input image> |

| remap | xf::cv::remap | ./<executable name>.elf <path to input image> <path to mapx data> <path to mapy data> |

| Resize | xf::cv::resize | ./<executable name>.elf <path to input image> |

| rgbir2bayer | xf::cv::rgbir2bayer | ./<executable name>.elf <path to input image> |

| scharrfilter | xf::cv::Scharr | ./<executable name>.elf <path to input image> |

| set | xf::cv::set | ./<executable name>.elf <path to input image> |

| SemiGlobalBM | xf::cv::SemiGlobalBM | ./<executable name>.elf <path to left image> <path to right image> |

| sobelfilter | xf::cv::Sobel | ./<executable name>.elf <path to input image> |

| stereopipeli ne | xf::cv::StereoPipeline | ./<executable name>.elf <path to left image> <path to right image> |

| stereolbm | xf::cv::StereoBM | ./<executable name>.elf <path to left image> <path to right image> |

| subRS | xf::cv::SubRS | ./<executable name>.elf <path to input image> |

| subS | xf::cv::SubS | ./<executable name>.elf <path to input image> |

| sum | xf::cv::sum | ./<executable name>.elf <path to input image 1> <path to input image 2> |

| Svm | xf::cv::SVM | ./<executable name>.elf |

| threshold | xf::cv::Threshold | ./<executable name>.elf <path to input image> |

| 3dlut | xf::cv::lut3d | ./<executable name>.elf <path to input image> |

| warptransfor m | xf::cv::warpTransform | ./<executable name>.elf <path to input image> |

| zero | xf::cv::zero | ./<executable name>.elf <path to input image> |

Getting Started with HLS¶

The Vitis vision library can be used to build applications in Vitis HLS. This section of the document provides steps on how to run a single library component through the Vitis HLS 2021.2 flow which includes, C-simulation, C-synthesis, C/RTL co-simulation, and exporting the RTL as an IP.

All the functions under L1 folder of the Vitis Vision library can be built through Vitis HLS flow in the following two modes:

- Tcl Script Mode

- GUI Mode

Tcl Script Mode

Each configuration of all functions in L1 are provided with TCL script which can be run through the available Makefile.

Open a terminal and run the following commands to set the environment and build :

source < path-to-Vitis-installation-directory >/settings64.sh

source < part-to-XRT-installation-directory >/setup.sh

export DEVICE=< path-to-platform-directory >/< platform >.xpfm

export OPENCV_INCLUDE=< path-to-opencv-include-folder >

export OPENCV_LIB=< path-to-opencv-lib-folder >

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:< path-to-opencv-lib-folder >

make run CSIM=1 CSYNTH=1 COSIM=1 VIVADO_IMPL=1

GUI Mode

Use the following steps to operate the HLS Standalone Mode using GUI:

- Open a terminal and update the LD_LIBRARY_PATH to point to OpenCV lib folder.

- From the same terminal, open Vitis HLS in GUI mode and create a new project

- Specify the name of the project. For example - Dilation.

- Click Browse to enter a workspace folder used to store your projects.

- Click Next.

- Under the source files section, add the accel.cpp file which can be found in the examples folder. Also, fill the top function name (here it is dilation_accel).

- Click Next.

- Under the test bench section add tb.cpp.

- Click Next.

- Select the clock period to the required value (10ns in example).

- Select the suitable part. For example,

xczu9eg-ffvb1156-2-i. - Click Finish.

- Right click on the created project and select Project Settings.

- In the opened tab, select Simulation.

- Files added under the Test Bench section will be displayed. Select a file and click Edit CFLAGS.

- Enter

-I<path-to-L1-include-directory> -std=c++0x -I<path-to-opencv-include-folder>. - In the Linker Flags section, enter the opencv libs and path to the opencv libs

-L<path-to-opencv-lib-folder> -lopencv_core -lopencv_imgcodecs -lopencv_imgproc - Select Synthesis and repeat the above step for all the displayed files. Do not add opencv include path here.

- Click OK.

- Run the C Simulation, select Clean Build and specify the required input arguments.

- Click OK.

- All the generated output files/images will be present in the solution1->csim->build.

- Run C synthesis.

- Run co-simulation by specifying the proper input arguments.

- The status of co-simulation can be observed on the console.

Constraints for Co-simulation

There are few limitations in performing co-simulation of the Vitis vision functions. They are:

- Functions with multiple accelerators are not supported.

- Compiler and simulator are default in HLS (gcc, xsim).

- Since HLS does not support multi-kernel integration, the current flow also does not support multi-kernel integration. Hence, the Pyramidal Optical flow and Canny Edge Detection functions and examples are not supported in this flow.

- The maximum image size (HEIGHT and WIDTH) set in config.h file should be equal to the actual input image size.

AXI Video Interface Functions¶

Vitis vision has functions that will transform the xf::cv::Mat into Xilinx®

Video Streaming interface and vice-versa. xf::cv::AXIvideo2xfMat() and

xf::cv::xfMat2AXIVideo() act as video interfaces to the IPs of the

Vitis vision functions in the Vivado® IP integrator.

cvMat2AXIvideoxf<NPC> and AXIvideo2cvMatxf<NPC>

are used on the host side.

An example function, ‘axiconv’, depicting the usage of these functions is provided in the L1/examples directory.

| Video Library Function | Description |

|---|---|

| AXIvideo2xfMat | Converts data from an AXI4 video stream representation to xf::cv::Mat format. |

| xfMat2AXIvideo | Converts data stored as xf::cv::Mat format to an AXI4 video stream. |

| cvMat2AXIvideoxf | Converts data stored as cv::Mat format to an AXI4 video stream |

| AXIvideo2cvMatxf | Converts data from an AXI4 video stream representation to cv::Mat format. |

AXIvideo2xfMat¶

The AXIvideo2xfMat function receives a sequence of images using the

AXI4 Streaming Video and produces an xf::cv::Mat representation.

API Syntax

template<int W,int T,int ROWS, int COLS,int NPC,int XFCVDEPTH = _XFCVDEPTH_DEFAULT>

int AXIvideo2xfMat(hls::stream< ap_axiu<W,1,1,1> >& AXI_video_strm, xf::cv::Mat<T,ROWS, COLS, NPC, XFCVDEPTH>& img)

Parameter Descriptions

The following table describes the template and the function parameters.

| Parameter | Description |

|---|---|

| W | Data width of AXI4-Stream. Recommended value is pixel depth. |

| T | Pixel type of the image. 1 channel (XF_8UC1). Data width of pixel must be no greater than W. |

| ROWS | Maximum height of input image. |

| COLS | Maximum width of input image. |

| NPC | Number of pixels to be processed per cycle. Possible options are XF_NPPC1 and XF_NPPC8 for 1-pixel and 8-pixel operations respectively. |

| XFCVDEPTH | Depth of the Input image. |

| AXI_video_strm | HLS stream of ap_axiu (axi protocol) type. |

| img | Input image. |

This function will return bit error of ERROR_IO_EOL_EARLY( 1 ) or ERROR_IO_EOL_LATE( 2 ) to indicate an unexpected line length, by detecting TLAST input.

For more information about AXI interface see UG761.

xfMat2AXIvideo¶

The Mat2AXI video function receives an xf::cv::Mat representation of a

sequence of images and encodes it correctly using the AXI4 Streaming

video protocol.

API Syntax

template<int W, int T, int ROWS, int COLS,int NPC,int XFCVDEPTH = _XFCVDEPTH_DEFAULT>

int xfMat2AXIvideo(xf::cv::Mat<T,ROWS, COLS,NPC, XFCVDEPTH>& img,hls::stream<ap_axiu<W,1,1,1> >& AXI_video_strm)

Parameter Descriptions

The following table describes the template and the function parameters.

| Parameter | Description |

|---|---|

| W | Data width of AXI4-Stream. Recommended value is pixel depth. |

| T | Pixel type of the image. 1 channel (XF_8UC1). Data width of pixel must be no greater than W. |

| ROWS | Maximum height of input image. |

| COLS | Maximum width of input image. |

| NPC | Number of pixels to be processed per cycle. Possible options are XF_NPPC1 and XF_NPPC8 for 1-pixel and 8-pixel operations respectively. |

| XFCVDEPTH | Depth of the Output image. |

| AXI_video_strm | HLS stream of ap_axiu (axi protocol) type. |

| img | Output image. |

This function returns the value 0.

Note: The NPC values across all the functions in a data flow must follow the same value. If there is mismatch it throws a compilation error in HLS.

cvMat2AXIvideoxf¶

The cvMat2Axivideoxf function receives image as cv::Mat

representation and produces the AXI4 streaming video of image.

API Syntax

template<int NPC,int W>

void cvMat2AXIvideoxf(cv::Mat& cv_mat, hls::stream<ap_axiu<W,1,1,1> >& AXI_video_strm)

Parameter Descriptions

The following table describes the template and the function parameters.

| Parameter | Description |

|---|---|

| W | Data width of AXI4-Stream. Recommended value is pixel depth. |

| NPC | Number of pixels to be processed per cycle. Possible options are XF_NPPC1 and XF_NPPC8 for 1-pixel and 8-pixel operations respectively. |

| AXI_video_strm | HLS stream of ap_axiu (axi protocol) type. |

| cv_mat | Input image. |

AXIvideo2cvMatxf¶

The Axivideo2cvMatxf function receives image as AXI4 streaming video

and produces the cv::Mat representation of image

API Syntax

template<int NPC,int W>

void AXIvideo2cvMatxf(hls::stream<ap_axiu<W,1,1,1> >& AXI_video_strm, cv::Mat& cv_mat)

Parameter Descriptions

The following table describes the template and the function parameters.

| Parameter | Description |

|---|---|

| W | Data width of AXI4-Stream. Recommended value is pixel depth. |

| NPC | Number of pixels to be processed per cycle. Possible options are XF_NPPC1 and XF_NPPC8 for 1-pixel and 8-pixel operations respectively. |

| AXI_video_strm | HLS stream of ap_axiu (axi protocol) type. |

| cv_mat | Output image. |

Migrating HLS Video Library to Vitis vision¶

The HLS video library has been deprecated. All the functions and most of the infrastructure available in HLS video library are now available in Vitis vision with their names changed and some modifications. These HLS video library functions ported to Vitis vision supports build flow also.

This section provides the details on using the C++ video processing functions and the infrastructure present in HLS video library.

Infrastructure Functions and Classes¶

All the functions imported from HLS video library now take xf::cv::Mat (in sync with Vitis vision library) to represent image data instead of hls::Mat. The main difference between these two is that the hls::Mat uses hls::stream to store the data whereas xf::cv::Mat uses a pointer. Therefore, hls:: Mat cannot be exactly replaced with xf::cv::Mat for migrating.

Below table summarizes the differences between member functions of hls::Mat to xf::cv::Mat.

| Member Function | hls::Mat (HLS Video lib) | xf::cv::Mat (Vitis vision lib) |

|---|---|---|

| channels() | Returns the number of channels | Returns the number of channels |

| type() | Returns the enum value of pixel type | Returns the enum value of pixel type |

| depth() | Returns the enum value of pixel type | Returns the depth of pixel including channels |

| read() | Readout a value and return it as a scalar from stream | Readout a value from a given location and return it as a packed (for multi-pixel/clock) value. |

| operator >> | Similar to read() | Not available in Vitis vision |

| operator << | Similar to write() | Not available in Vitis vision |

| Write() | Write a scalar value into the stream | Writes a packed (for multi-pixel/clock) value into the given location. |

Infrastructure files available in HLS Video Library hls_video_core.hpp, hls_video_mem.hpp, hls_video_types.hpp are moved to xf_video_core.hpp, xf_video_mem.hpp, xf_video_types.hpp in Vitis vision Library and hls_video_imgbase.hpp is deprecated. Code inside these files unchanged except that these are now under xf::cv::namespace.

Classes¶

- Memory Window Buffer

- hls::window is now xf::cv::window. No change in the implementation, except the namespace change. This is located in “xf_video_mem.h” file.

- Memory Line Buffer

- hls::LineBuffer is now xf::cv::LineBuffer. No difference between the two, except xf::cv::LineBuffer has extra template arguments for inferring different types of RAM structures, for the storage structure used. Default storage type is “RAM_S2P_BRAM” with RESHAPE_FACTOR=1. Complete description can be found here xf::cv::LineBuffer. This is located in xf_video_mem.hpp file.

Funtions¶

- OpenCV interface functions

- These functions covert image data of OpenCV Mat format to/from HLS AXI types. HLS Video Library had 14 interface functions, out of which, two functions are available in Vitis vision Library: cvMat2AXIvideo and AXIvideo2cvMat located in “xf_axi.h” file. The rest are all deprecated.

- AXI4-Stream I/O Functions

- The I/O functions which convert hls::Mat to/from AXI4-Stream compatible data type (hls::stream) are hls::AXIvideo2Mat, hls::Mat2AXIvideo. These functions are now deprecated and added 2 new functions xf::cv::AXIvideo2xfMat and xf::cv:: xfMat2AXIvideo to facilitate the xf::cv::Mat to/from conversion. To use these functions, the header file “xf_infra.hpp” must be included.

xf::cv::window¶

A template class to represent the 2D window buffer. It has three parameters to specify the number of rows, columns in window buffer and the pixel data type.

Class definition¶

template<int ROWS, int COLS, typename T>

class Window {

public:

Window()

/* Window main APIs */

void shift_pixels_left();

void shift_pixels_right();

void shift_pixels_up();

void shift_pixels_down();

void insert_pixel(T value, int row, int col);

void insert_row(T value[COLS], int row);

void insert_top_row(T value[COLS]);

void insert_bottom_row(T value[COLS]);

void insert_col(T value[ROWS], int col);

void insert_left_col(T value[ROWS]);

void insert_right_col(T value[ROWS]);

T& getval(int row, int col);

T& operator ()(int row, int col);

T val[ROWS][COLS];

#ifdef __DEBUG__

void restore_val();

void window_print();

T val_t[ROWS][COLS];

#endif

};

Parameter Descriptions¶

The following table lists the xf::cv::Window class members and their descriptions.

| Parameter | Description |

|---|---|

| Val | 2-D array to hold the contents of buffer. |

Member Function Description¶

| Function | Description |

|---|---|

| shift_pixels_left() | Shift the window left, that moves all stored data within the window right, leave the leftmost column (col = COLS-1) for inserting new data. |

| shift_pixels_right() | Shift the window right, that moves all stored data within the window left, leave the rightmost column (col = 0) for inserting new data. |

| shift_pixels_up() | Shift the window up, that moves all stored data within the window down, leave the top row (row = ROWS-1) for inserting new data. |

| shift_pixels_down() | Shift the window down, that moves all stored data within the window up, leave the bottom row (row = 0) for inserting new data. |

| insert_pixel(T value, int row, int col) | Insert a new element value at location (row, column) of the window. |

| insert_row(T value[COLS], int row) | Inserts a set of values in any row of the window. |

| insert_top_row(T value[COLS]) | Inserts a set of values in the top row = 0 of the window. |

| insert_bottom_row(T value[COLS]) | Inserts a set of values in the bottom row = ROWS-1 of the window. |

| insert_col(T value[ROWS], int col) | Inserts a set of values in any column of the window. |

| insert_left_col(T value[ROWS]) | Inserts a set of values in left column = 0 of the window. |

| insert_right_col(T value[ROWS]) | Inserts a set of values in right column = COLS-1 of the window. |

| T& getval(int row, int col) | Returns the data value in the window at position (row,column). |

| T& operator ()(int row, int col) | Returns the data value in the window at position (row,column). |

| restore_val() | Restore the contents of window buffer to another array. |

| window_print() | Print all the data present in window buffer onto console. |

Template Parameter Description¶

| Parameter | Description |

|---|---|

| ROWS | Number of rows in the window buffer. |

| COLS | Number of columns in the window buffer. |

| T | Data type of pixel in the window buffer. |

Sample code for window buffer declaration

Window<K_ROWS, K_COLS, unsigned char> kernel;

xf::cv::LineBuffer¶

A template class to represent 2D line buffer. It has three parameters to specify the number of rows, columns in window buffer and the pixel data type.

Class definition¶

template<int ROWS, int COLS, typename T, XF_ramtype_e MEM_TYPE=RAM_S2P_BRAM, int RESHAPE_FACTOR=1>

class LineBuffer {

public:

LineBuffer()

/* LineBuffer main APIs */

/* LineBuffer main APIs */

void shift_pixels_up(int col);

void shift_pixels_down(int col);

void insert_bottom_row(T value, int col);

void insert_top_row(T value, int col);

void get_col(T value[ROWS], int col);

T& getval(int row, int col);

T& operator ()(int row, int col);

/* Back compatible APIs */

void shift_up(int col);

void shift_down(int col);

void insert_bottom(T value, int col);

void insert_top(T value, int col);

T val[ROWS][COLS];

#ifdef __DEBUG__

void restore_val();

void linebuffer_print(int col);

T val_t[ROWS][COLS];

#endif

};

Parameter Descriptions¶

The following table lists the xf::cv::LineBuffer class members and their descriptions.

| Parameter | Description |

|---|---|

| Val | 2-D array to hold the contents of line buffer. |

Member Functions Description¶

| Function | Description |

|---|---|

| shift_pixels_up(int col) | Line buffer contents Shift up, new values will be placed in the bottom row=ROWS-1. |

| shift_pixels_down(int col) | Line buffer contents Shift down, new values will be placed in the top row=0. |

| insert_bottom_row(T value, int col) | Inserts a new value in bottom row= ROWS-1 of the line buffer. |

| insert_top_row(T value, int col) | Inserts a new value in top row=0 of the line buffer. |

| get_col(T value[ROWS], int col) | Get a column value of the line buffer. |

| T& getval(int row, int col) | Returns the data value in the line buffer at position (row, column). |

| T& operator ()(int row, int col); | Returns the data value in the line buffer at position (row, column). |

Template Parameter Description¶

| Parameter | Description |

|---|---|

| ROWS | Number of rows in line buffer. |

| COLS | Number of columns in line buffer. |

| T | Data type of pixel in line buffer. |

| MEM_TYPE | Type of storage element. It takes one of the following enumerated values: RAM_1P_BRAM, RAM_1P_URAM, RAM_2P_BRAM, RAM_2P_URAM, RAM_S2P_BRAM, RAM_S2P_URAM, RAM_T2P_BRAM, RAM_T2P_URAM. |

| RESHAPE_FACTOR | Specifies the amount to divide an array. |

Sample code for line buffer declaration:

LineBuffer<3, 1920, XF_8UC3, RAM_S2P_URAM,1> buff;

Video Processing Functions¶

The following table summarizes the video processing functions ported from HLS Video Library into Vitis vision Library along with the API modifications.

| Functions | HLS Video Library -API | xfOpenCV Library-API |

|---|---|---|

| addS | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void AddS(Mat<ROWS, COLS, SRC_T>&src,Scalar<HLS_MAT_CN(SRC_T), _T> scl, Mat<ROWS, COLS, DST_T>& dst) |

template<int POLICY_TYPE, int SRC_T, int ROWS, int COLS, int NPC =1> void addS(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| AddWeighted | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T, typename P_T> void AddWeighted(Mat<ROWS, COLS, SRC1_T>& src1,P_T alpha,Mat<ROWS, COLS, SRC2_T>& src2,P_T beta, P_T gamma,Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T,int DST_T, int ROWS, int COLS, int NPC = 1> void addWeighted(xf::Mat<SRC_T, ROWS, COLS, NPC> & src1,float alpha, xf::Mat<SRC_T, ROWS, COLS, NPC> & src2,float beta, float gama, xf::Mat<DST_T, ROWS, COLS, NPC> & dst) |

| Cmp | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void Cmp(Mat<ROWS, COLS, SRC1_T>& src1,Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst,int cmp_op) |

template<int CMP_OP, int SRC_T, int ROWS, int COLS, int NPC =1> void compare(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, xf::Mat<SRC_T, ROWS, COLS, NPC> & _src2,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| CmpS | template<int ROWS, int COLS, int SRC_T, typename P_T, int DST_T> void CmpS(Mat<ROWS, COLS, SRC_T>& src, P_T value, Mat<ROWS, COLS, DST_T>& dst, int cmp_op) |

template<int CMP_OP, int SRC_T, int ROWS, int COLS, int NPC =1> void compare(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| Max | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void Max(Mat<ROWS, COLS, SRC1_T>& src1, Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst) |

template<int SRC_T, int ROWS, int COLS, int NPC =1> void Max(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, xf::Mat<SRC_T, ROWS, COLS, NPC> & _src2,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| MaxS | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void MaxS(Mat<ROWS, COLS, SRC_T>& src, _T value, Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T, int ROWS, int COLS, int NPC =1> void max(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| Min | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void Min(Mat<ROWS, COLS, SRC1_T>& src1, Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T, int ROWS, int COLS, int NPC =1> void Min(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, xf::Mat<SRC_T, ROWS, COLS, NPC> & _src2,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| MinS | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void MinS(Mat<ROWS, COLS, SRC_T>& src, _T value,Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T, int ROWS, int COLS, int NPC =1> void min(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| PaintMask | template<int SRC_T,int MASK_T,int ROWS,int COLS> void PaintMask( Mat<ROWS,COLS,SRC_T> &_src, Mat<ROWS,COLS,MASK_T>&_mask, Mat<ROWS,COLS,SRC_T>&_dst,Scalar<HLS_MAT_CN(SRC_T),HLS_TNAME(SRC_T)> _color) |

template< int SRC_T,int MASK_T, int ROWS, int COLS,int NPC=1> void paintmask(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src_mat, xf::Mat<MASK_T, ROWS, COLS, NPC> & in_mask, xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst_mat, unsigned char _color[XF_CHANNELS(SRC_T,NPC)]) |

| Reduce | template<typename INTER_SUM_T, int ROWS, int COLS, int SRC_T, int DST_ROWS, int DST_COLS, int DST_T> void Reduce( Mat<ROWS, COLS, SRC_T> &src, Mat<DST_ROWS, DST_COLS, DST_T> &dst, int dim, int op=HLS_REDUCE_SUM) |

template< int REDUCE_OP, int SRC_T,int DST_T, int ROWS, int COLS,int ONE_D_HEIGHT, int ONE_D_WIDTH, int NPC=1> void reduce(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src_mat, xf::Mat<DST_T, ONE_D_HEIGHT, ONE_D_WIDTH, 1> & _dst_mat, unsigned char dim) |

| Zero | template<int ROWS, int COLS, int SRC_T, int DST_T> void Zero(Mat<ROWS, COLS, SRC_T>& src, Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T, int ROWS, int COLS, int NPC =1> void zero(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| Sum | template<typename DST_T, int ROWS, int COLS, int SRC_T> Scalar<HLS_MAT_CN(SRC_T), DST_T> Sum( Mat<ROWS, COLS, SRC_T>& src) |

template< int SRC_T, int ROWS, int COLS, int NPC = 1> void sum(xf::Mat<SRC_T, ROWS, COLS, NPC> & src1, double sum[XF_CHANNELS(SRC_T,NPC)] ) |

| SubS | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void SubS(Mat<ROWS, COLS, SRC_T>& src, Scalar<HLS_MAT_CN(SRC_T), _T> scl, Mat<ROWS, COLS, DST_T>& dst) |

template<int POLICY_TYPE, int SRC_T, int ROWS, int COLS, int NPC =1> void SubS(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| SubRS | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void SubRS(Mat<ROWS, COLS, SRC_T>& src, Scalar<HLS_MAT_CN(SRC_T), _T> scl, Mat<ROWS, COLS, DST_T>& dst) |

template<int POLICY_TYPE, int SRC_T, int ROWS, int COLS, int NPC =1> void SubRS(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| Set | template<int ROWS, int COLS, int SRC_T, typename _T, int DST_T> void Set(Mat<ROWS, COLS, SRC_T>& src, Scalar<HLS_MAT_CN(SRC_T), _T> scl, Mat<ROWS, COLS, DST_T>& dst) |

template< int SRC_T, int ROWS, int COLS, int NPC =1> void set(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, unsigned char _scl[XF_CHANNELS(SRC_T,NPC)],xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| Absdiff | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void AbsDiff( Mat<ROWS, COLS, SRC1_T>& src1, Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst) |

template<int SRC_T, int ROWS, int COLS, int NPC =1> void absdiff(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1,xf::Mat<SRC_T, ROWS, COLS, NPC> & _src2,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| And | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void And( Mat<ROWS, COLS, SRC1_T>& src1, Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst) |

template<int SRC_T, int ROWS, int COLS, int NPC = 1> void bitwise_and(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1, xf::Mat<SRC_T, ROWS, COLS, NPC> & _src2, xf::Mat<SRC_T, ROWS, COLS, NPC> &_dst) |

| Dilate | template<int Shape_type,int ITERATIONS,int SRC_T, int DST_T, typename KN_T,int IMG_HEIGHT,int IMG_WIDTH,int K_HEIGHT,int K_WIDTH> void Dilate(Mat<IMG_HEIGHT, IMG_WIDTH, SRC_T>&_src,Mat<IMG_HEIGHT, IMG_WIDTH, DST_T&_dst,Window<K_HEIGHT,K_WIDTH,KN_T>&_kernel) |

template<int BORDER_TYPE, int TYPE, int ROWS, int COLS,int K_SHAPE,int K_ROWS,int K_COLS, int ITERATIONS, int NPC=1> void dilate (xf::Mat<TYPE, ROWS, COLS, NPC> & _src, xf::Mat<TYPE, ROWS, COLS, NPC> & _dst,unsigned char _kernel[K_ROWS*K_COLS]) |

| Duplicate | template<int ROWS, int COLS, int SRC_T, int DST_T> void Duplicate(Mat<ROWS, COLS, SRC_T>& src,Mat<ROWS, COLS, DST_T>& dst1,Mat<ROWS, COLS, DST_T>& dst2) |

template<int SRC_T, int ROWS, int COLS,int NPC> void duplicateMat(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src, xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst1,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst2) |

| EqualizeHist | template<int SRC_T, int DST_T,int ROW, int COL> void EqualizeHist(Mat<ROW, COL, SRC_T>&_src,Mat<ROW, COL, DST_T>&_dst) |

template<int SRC_T, int ROWS, int COLS, int NPC = 1> void equalizeHist(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src,xf::Mat<SRC_T, ROWS, COLS, NPC> & _src1,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst) |

| erode | template<int Shape_type,int ITERATIONS,int SRC_T, int DST_T, typename KN_T,int IMG_HEIGHT,int IMG_WIDTH,int K_HEIGHT,int K_WIDTH> void Erode(Mat<IMG_HEIGHT, IMG_WIDTH, SRC_T>&_src,Mat<IMG_HEIGHT,IMG_WIDTH,DST_T>&_dst,Window<K_HEIGHT,K_WIDTH,KN_T>&_kernel) |

template<int BORDER_TYPE, int TYPE, int ROWS, int COLS,int K_SHAPE,int K_ROWS,int K_COLS, int ITERATIONS, int NPC=1> void erode (xf::Mat<TYPE, ROWS, COLS, NPC> & _src, xf::Mat<TYPE, ROWS, COLS, NPC> & _dst,unsigned char _kernel[K_ROWS*K_COLS]) |

| FASTX | template<int SRC_T,int ROWS,int COLS> void FASTX(Mat<ROWS,COLS,SRC_T> &_src, Mat<ROWS,COLS,HLS_8UC1>&_mask,HLS_TNAME(SRC_T)_threshold,bool _nomax_supression) |

template<int NMS,int SRC_T,int ROWS, int COLS,int NPC=1> void fast(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src_mat,xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst_mat,unsigned char _threshold) |

| Filter2D | template<int SRC_T, int DST_T, typename KN_T, typename POINT_T, int IMG_HEIGHT,int IMG_WIDTH,int K_HEIGHT,int K_WIDTH> void Filter2D(Mat<IMG_HEIGHT, IMG_WIDTH, SRC_T> &_src,Mat<IMG_HEIGHT, IMG_WIDTH, DST_T> &_dst,Window<K_HEIGHT,K_WIDTH,KN_T>&_kernel,Point_<POINT_T>anchor) |

template<int BORDER_TYPE,int FILTER_WIDTH,int FILTER_HEIGHT, int SRC_T,int DST_T, int ROWS, int COLS,int NPC> void filter2D(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src_mat,xf::Mat<DST_T, ROWS, COLS, NPC> & _dst_mat,short int filter[FILTER_HEIGHT*FILTER_WIDTH],unsigned char _shift) |

| GaussianBlur | template<int KH,int KW,typename BORDERMODE,int SRC_T,int DST_T,int ROWS,int COLS> void GaussianBlur(Mat<ROWS, COLS, SRC_T> &_src, Mat<ROWS, COLS, DST_T> &_dst,double sigmaX=0,double sigmaY=0) |

template<int FILTER_SIZE, int BORDER_TYPE, int SRC_T, int ROWS, int COLS,int NPC = 1> void GaussianBlur(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src, xf::Mat<SRC_T, ROWS, COLS, NPC> & _dst, float sigma) |

| Harris | template<int blockSize,int Ksize,typename KT,int SRC_T,int DST_T,int ROWS,int COLS> void Harris(Mat<ROWS, COLS, SRC_T> &_src,Mat<ROWS, COLS, DST_T>&_dst,KT k,int threshold |

template<int FILTERSIZE,int BLOCKWIDTH, int NMSRADIUS,int SRC_T,int ROWS, int COLS,int NPC=1,bool USE_URAM=false> void cornerHarris(xf::Mat<SRC_T, ROWS, COLS, NPC> & src,xf::Mat<SRC_T, ROWS, COLS, NPC> & dst,uint16_t threshold, uint16_t k) |

| CornerHarris | template<int blockSize,int Ksize,typename KT,int SRC_T,int DST_T,int ROWS,int COLS> void CornerHarris( Mat<ROWS, COLS, SRC_T>&_src,Mat<ROWS, COLS, DST_T>&_dst,KT k) |

template<int FILTERSIZE,int BLOCKWIDTH, int NMSRADIUS,int SRC_T,int ROWS, int COLS,int NPC=1,bool USE_URAM=false> void cornerHarris(xf::Mat<SRC_T, ROWS, COLS, NPC> & src,xf::Mat<SRC_T, ROWS, COLS, NPC> & dst,uint16_t threshold, uint16_t k |

| HoughLines2 | template<unsigned int theta,unsigned int rho,typename AT,typename RT,int SRC_T,int ROW,int COL,unsigned int linesMax> void HoughLines2(Mat<ROW,COL,SRC_T> &_src, Polar_<AT,RT> (&_lines)[linesMax],unsigned int threshold) |

template<unsigned int RHO,unsigned int THETA,int MAXLINES,int DIAG,int MINTHETA,int MAXTHETA,int SRC_T, int ROWS, int COLS,int NPC> void HoughLines(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src_mat,float outputrho[MAXLINES],float outputtheta[MAXLINES],short threshold,short linesmax) |

| Integral | template<int SRC_T, int DST_T, int ROWS,int COLS> void Integral(Mat<ROWS, COLS, SRC_T>&_src, Mat<ROWS+1, COLS+1, DST_T>&_sum ) |

template<int SRC_TYPE,int DST_TYPE, int ROWS, int COLS, int NPC> void integral(xf::Mat<SRC_TYPE, ROWS, COLS, NPC> & _src_mat, xf::Mat<DST_TYPE, ROWS, COLS, NPC> & _dst_mat) |

| Merge | template<int ROWS, int COLS, int SRC_T, int DST_T> void Merge( Mat<ROWS, COLS, SRC_T>& src0, Mat<ROWS, COLS, SRC_T>& src1, Mat<ROWS, COLS, SRC_T>& src2, Mat<ROWS, COLS, SRC_T>& src3, Mat<ROWS, COLS, DST_T>& dst) |

template<int SRC_T, int DST_T, int ROWS, int COLS, int NPC=1> void merge(xf::Mat<SRC_T, ROWS, COLS, NPC> &_src1, xf::Mat<SRC_T, ROWS, COLS, NPC> &_src2, xf::Mat<SRC_T, ROWS, COLS, NPC> &_src3, xf::Mat<SRC_T, ROWS, COLS, NPC> &_src4, xf::Mat<DST_T, ROWS, COLS, NPC> &_dst) |

| MinMaxLoc | template<int ROWS, int COLS, int SRC_T, typename P_T> void MinMaxLoc(Mat<ROWS, COLS, SRC_T>& src, P_T* min_val,P_T* max_val,Point& min_loc, Point& max_loc) |

template<int SRC_T,int ROWS,int COLS,int NPC=0> void minMaxLoc(xf::Mat<SRC_T, ROWS, COLS, NPC> & _src,int32_t *min_value, int32_t *max_value,uint16_t *_minlocx, uint16_t *_minlocy, uint16_t *_maxlocx, uint16_t *_maxlocy ) |

| Mul | template<int ROWS, int COLS, int SRC1_T, int SRC2_T, int DST_T> void Mul(Mat<ROWS, COLS, SRC1_T>& src1, Mat<ROWS, COLS, SRC2_T>& src2, Mat<ROWS, COLS, DST_T>& dst) |

template<int POLICY_TYPE, int SRC_T, int ROWS, int COLS, int NPC = 1> void multiply(xf::Mat<SRC_T, ROWS, COLS, NPC> & src1, xf::Mat<SRC_T, ROWS, COLS, NPC> & src2, xf::Mat<SRC_T, ROWS, COLS, NPC> & dst,float scale) |

| Not | template<int ROWS, int COLS, int SRC_T, int DST_T> void Not(Mat<ROWS, COLS, SRC_T>& src, Mat<ROWS, COLS, DST_T>& dst) |

template<int SRC_T, int ROWS, int COLS, int NPC = 1> void bitwise_not(xf::Mat<SRC_T, ROWS, COLS, NPC> & src, xf::Mat<SRC_T, ROWS, COLS, NPC> & dst) |

| Range | template<int ROWS, int COLS, int SRC_T, int DST_T, typename P_T> void Range(Mat<ROWS, COLS, SRC_T>& src, Mat<ROWS, COLS, DST_T>& dst, P_T start,P_T end) |

template<int SRC_T, int ROWS, int COLS,int NPC=1> void inRange(xf::Mat<SRC_T, ROWS, COLS, NPC> & src,unsigned char lower_thresh,unsigned char upper_thresh,xf::Mat<SRC_T, ROWS, COLS, NPC> & dst) |

| Resize | template<int SRC_T, int ROWS,int COLS,int DROWS,int DCOLS> void Resize ( Mat<ROWS, COLS, SRC_T> &_src, Mat<DROWS, DCOLS, SRC_T> &_dst, int interpolation=HLS_INTER_LINEAR ) |

template<int INTERPOLATION_TYPE, int TYPE, int SRC_ROWS, int SRC_COLS, int DST_ROWS, int DST_COLS, int NPC, int MAX_DOWN_SCALE> void resize (xf::Mat<TYPE, SRC_ROWS, SRC_COLS, NPC> & _src, xf::Mat<TYPE, DST_ROWS, DST_COLS, NPC> & _dst) |