Kria™ KV260 Vision AI Starter Kit Smart Camera Tutorial

Setting up the Board and Application Deployment

Introduction

This document shows how to set up the board and run the smartcam application.

Setting up the Board

Note: Skip the "Flash the SD Card" step if you have already flashed the SD card with the KV260 Vision AI Starter Kit image (kv260-sdcard.img.wic).

Flash the SD Card

Download the SD Card Image and save it on your computer.

Connect the microSD card to your computer.

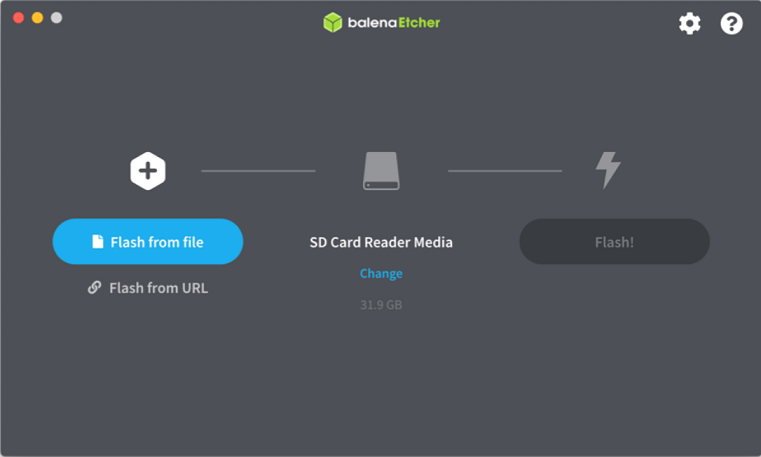

Download the Balena Etcher tool (recommended; available for Windows, Linux, and macOS), which is used to flash the SD card.

Follow the instructions in the tool and select the downloaded image to flash onto your microSD card.

Eject the SD card from your computer.

If you are looking for other OS-specific tools to write the image to the SD card, refer to the KV260 Getting Started Page.

Hardware Setup:

microSD:

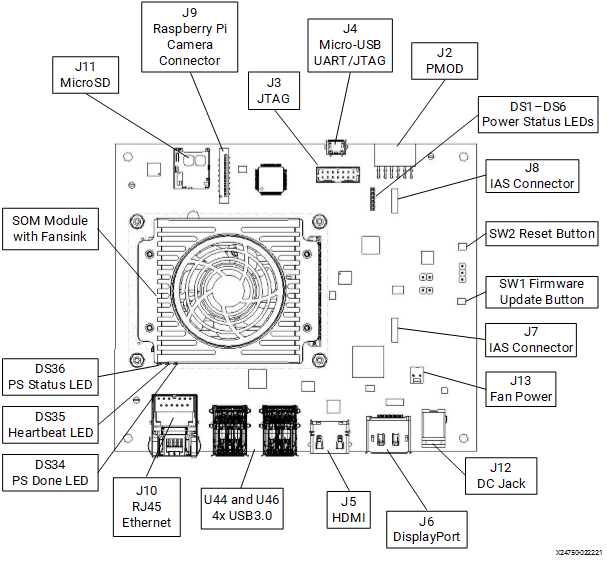

Insert the SD card into the slot at J11.

Monitor:

Before booting, connect a 1080p/4K monitor to the board via either the DP or the HDMI port.

A 4K monitor is preferred, because it demonstrates the maximum supported resolution.

IAS sensor:

Before powering on the board, install an AR1335 sensor module in J7.

UART/JTAG interface:

To interact with the board and see boot-time information, connect a USB debugger to J4.

You may also use a USB webcam as an input device.

The webcam is an optional video input device supported by the application.

The recommended webcam is the Logitech BRIO.

Network connection:

Connect the Ethernet cable to your local network with DHCP enabled to install packages and run Jupyter Notebooks.

Audio Pmod setup as RTSP audio input:

The Audio Pmod is an optional audio input and output device.

In the smartcam application, only RTSP mode uses the audio input function to capture audio. The audio is then sent together with the images as an RTSP stream and can be received on the client side.

To set it up, first install the Pmod in J2, then connect a microphone or any other sound input device to the line input port. A headset with a built-in microphone will not work; the device needs to be a dedicated input.

The smartcam application does not yet support speakers.

Software Preparation:

Use a PC with network access to the board as the RTSP client machine.

Make sure that the PC and the KV260 Vision AI Starter Kit are on the same subnet.
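One way to check the subnet requirement from the client PC is a small Python sketch using the standard `ipaddress` module. The addresses and netmask shown are placeholders, not values from your network; substitute the ones reported on your PC and on the board.

```python
import ipaddress


def same_subnet(ip_a: str, ip_b: str, netmask: str) -> bool:
    """Return True when both IPv4 addresses belong to the same network for the given netmask."""
    # strict=False lets ip_network() accept a host address and mask off the host bits.
    net_a = ipaddress.ip_network(f"{ip_a}/{netmask}", strict=False)
    net_b = ipaddress.ip_network(f"{ip_b}/{netmask}", strict=False)
    return net_a == net_b


if __name__ == "__main__":
    # Placeholder addresses: replace with the PC and board addresses on your network.
    print(same_subnet("192.168.3.123", "192.168.3.45", "255.255.255.0"))
```

If the function returns False, the PC and the board are on different subnets for that netmask, and the RTSP client may not be able to reach the board directly.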

On the client machine, to receive and play the RTSP stream, we recommend installing FFplay, which is part of the FFmpeg package.

For Linux, you can install FFmpeg with the package manager of your distribution.

For Windows, you can find installation instructions at https://ffmpeg.org/download.html

Besides FFplay, VLC can also be used to play the RTSP stream, but it fails on some client machines where FFplay works well.

Power on the board, and boot the Linux image.

The Linux image will boot into the following login prompt:

xilinx-k26-starterkit-2020_2 login:

Use the petalinux user for login. You will be prompted to set a new password on the first login.

xilinx-k26-starterkit-2020_2 login: petalinux

You are required to change your password immediately (administrator enforced)

New password:

Retype new password:

The petalinux user does not have root privileges. Most commands used in subsequent tutorials have to be run using sudo, and you may be prompted to enter your password.

Note: The root user is disabled by default for security reasons. If you want to log in as the root user, follow the steps below; otherwise, skip this step and continue. Use the petalinux user's password at the first password prompt, then set a new password for the root user. You can then log in as the root user using the newly set root password.

xilinx-k26-starterkit-2020_2:~$ sudo su -l root

We trust you have received the usual lecture from the local System Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

Password:

root@xilinx-k26-starterkit-2020_2:~# passwd

New password:

Retype new password:

passwd: password updated successfully

Get the latest application package.

Check the package feed for new updates.

sudo dnf update

Confirm with “Y” when prompted to install new or updated packages.

Sometimes the local dnf cache needs to be cleaned first. To do so, run:

sudo dnf clean all

Get the list of available packages in the feed.

sudo xmutil getpkgs

Install the package with dnf install:

sudo dnf install packagegroup-kv260-smartcam.noarch

The system will ask “Is this ok [y/N]:” before downloading the packages. Type “y” to proceed.

Note: For setups without access to the internet, it is possible to download and use the package locally. Refer to Install from a local package feed for instructions.

Dynamically load the application package.

The firmware consists of a bitstream, a device tree overlay (dtbo), and an xclbin file. The firmware is loaded dynamically on user request once Linux has fully booted. The xmutil utility can be used for that purpose.

Show the list and status of available acceleration platforms and AI Applications:

sudo xmutil listapps

Switch to a different platform for a different AI application:

When xmutil listapps shows that there is no active accelerator, simply activate kv260-smartcam.

sudo xmutil loadapp kv260-smartcam

When an accelerator other than kv260-smartcam is already active, unload it first, then switch to kv260-smartcam.

sudo xmutil unloadapp

sudo xmutil loadapp kv260-smartcam
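The load and unload decision described above can be sketched in Python. This is an illustration only: the function name is hypothetical, and it expects you to read the active application name from the `sudo xmutil listapps` output yourself, since the output format is not reproduced in this tutorial.

```python
from typing import List, Optional

TARGET_APP = "kv260-smartcam"


def plan_xmutil_commands(active_app: Optional[str]) -> List[List[str]]:
    """Return the xmutil commands needed to make kv260-smartcam the active application.

    active_app is the currently active accelerator as read from `xmutil listapps`,
    or None when no accelerator is active.
    """
    if active_app == TARGET_APP:
        return []  # already active, nothing to do
    commands: List[List[str]] = []
    if active_app is not None:
        # Another accelerator is active: unload it before loading smartcam.
        commands.append(["sudo", "xmutil", "unloadapp"])
    commands.append(["sudo", "xmutil", "loadapp", TARGET_APP])
    return commands


if __name__ == "__main__":
    # "some-other-app" is a hypothetical name used only for illustration.
    for command in plan_xmutil_commands("some-other-app"):
        print(" ".join(command))
```

Each returned list can be passed to `subprocess.run(command, check=True)` on the board if you prefer to script the switch rather than type the commands.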

Getting demo video files suitable for the application:

To demonstrate the application when no MIPI or USB camera is at hand, a video file source is also supported.

You can download video files in MP4 format from the following links.

Then transcode each file to H264, which is one of the supported input formats.

ffmpeg -i input-video.mp4 -c:v libx264 -pix_fmt nv12 -vf scale=1920:1080 -r 30 output.nv12.h264

Finally, upload or copy the transcoded H264 files to the board (using scp or ftp, or by copying them onto the SD card, where they appear under /media/sd-mmcblk0p1/) and place them somewhere under /home/petalinux, which is the home directory of the user you log in as.
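When several demo videos need to be transcoded, the ffmpeg invocation above can be generated per file. The following Python sketch only builds the argument list, using the same options as the command shown; the function name and the output naming scheme (input stem plus `.nv12.h264`) are assumptions made for illustration.

```python
from pathlib import Path
from typing import List


def ffmpeg_transcode_args(src: str, width: int = 1920, height: int = 1080, fps: int = 30) -> List[str]:
    """Build the ffmpeg argument list that transcodes src to an NV12 H264 elementary stream."""
    source = Path(src)
    # Assumed naming scheme: keep the input stem and append ".nv12.h264".
    out = str(source.with_name(source.stem + ".nv12.h264"))
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264",
        "-pix_fmt", "nv12",
        "-vf", f"scale={width}:{height}",
        "-r", str(fps),
        out,
    ]


if __name__ == "__main__":
    print(" ".join(ffmpeg_transcode_args("input-video.mp4")))
```

The list can be handed to `subprocess.run(args, check=True)` on a machine where FFmpeg is installed.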

Run the Application

There are two ways to interact with the application.

Jupyter notebook

The system will automatically start a Jupyter server at /home/petalinux/notebooks as user petalinux.

Run the following command to install the notebooks shipped with the package, which reside in /opt/xilinx/share/notebooks/smartcam, to the folder /home/petalinux/notebooks/smartcam.

$ smartcam-install.py

This script also provides options to install the notebook of the current application to a specified location.

usage: smartcam-install [-h] [-d DIR] [-f]

Script to copy smartcam Jupyter notebook to user directory

optional arguments:

-h, --help show this help message and exit

-d DIR, --dir DIR Install the Jupyter notebook to the specified directory.

-f, --force Force to install the Jupyter notebook even if the destination directory exists.

Get the list of running Jupyter servers with the following command as user petalinux:

$ jupyter-server list

Output example:

Currently running servers:

http://ip:port/?token=xxxxxxxxxxxxxxxxxx :: /home/petalinux/notebooks

Stop the currently running server with the command:

$ jupyter-server stop 8888

To launch Jupyter notebook on the target, run the command below.

python3 /usr/bin/jupyter-lab --no-browser --notebook-dir=/home/petalinux/notebooks --ip=ip-address &

Replace ip-address with the board IP address reported by ifconfig.

Output example:

[I 2021-08-02 15:54:31.141 LabApp] JupyterLab extension loaded from /usr/lib/python3.8/site-packages/jupyterlab

[I 2021-08-02 15:54:31.141 LabApp] JupyterLab application directory is /usr/share/jupyter/lab

[I 2021-08-02 15:54:31.164 ServerApp] jupyterlab | extension was successfully loaded.

[I 2021-08-02 15:54:31.166 ServerApp] Serving notebooks from local directory: /home/petalinux/notebooks

[I 2021-08-02 15:54:31.166 ServerApp] Jupyter Server 1.2.1 is running at:

[I 2021-08-02 15:54:31.166 ServerApp] http://192.168.3.123:8888/lab?token=9f7a9cd1477e8f8226d62bc026c85df23868a1d9860eb5d5

[I 2021-08-02 15:54:31.166 ServerApp] or http://127.0.0.1:8888/lab?token=9f7a9cd1477e8f8226d62bc026c85df23868a1d9860eb5d5

[I 2021-08-02 15:54:31.167 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 2021-08-02 15:54:31.186 ServerApp]

To access the server, open this file in a browser:

file:///home/petalinux/.local/share/jupyter/runtime/jpserver-1119-open.html

Or copy and paste one of these URLs:

http://192.168.3.123:8888/lab?token=9f7a9cd1477e8f8226d62bc026c85df23868a1d9860eb5d5

or http://127.0.0.1:8888/lab?token=9f7a9cd1477e8f8226d62bc026c85df23868a1d9860eb5d5

Access the server by opening the server URL from the previous steps in the Chrome browser.

The notebook constructs the GStreamer pipeline string. You can print it by adding a simple Python statement and then play it with the gst-launch-1.0 command in the console. Some user option variables can be changed before running, and other parts of the pipeline can also be modified to see their effect.

Command line

The command-line options let the user define different video input and output targets for the “smartcam” application. Run the commands over the UART/debug interface.

Note: The application needs to be run with sudo.

Example scripts

Example scripts and option definitions are provided below.

Refer to File Structure to find the file locations.

MIPI RTSP server:

Invoking "sudo 01.mipi-rtsp.sh" will start an RTSP server for MIPI-captured images.

The script accepts ${width} ${height} as the 1st and 2nd parameters; the default is 1920 x 1080.

Running the script prints a message of the form:

stream ready at:

rtsp://boardip:port/test

Run "ffplay rtsp://boardip:port/test" on the client PC to receive the RTSP stream.

Checking:

You should see the images captured by the camera in the ffplay window. When the camera captures a face, a blue box should be drawn around the face and should follow its movement.

MIPI DP display:

Make sure the monitor is connected as described in the hardware setup.

Invoking "sudo 02.mipi-dp.sh" will play the captured video with detection results on the monitor.

The script accepts ${width} ${height} as the 1st and 2nd parameters; the default is 1920 x 1080.

Checking:

You should see the images captured by the camera on the monitor connected to the board. When the camera captures a face, a blue box should be drawn around the face and should follow its movement.

File to File

Invoking "sudo 03.file-file.sh" takes the first argument passed to the script as the path to the H264 video file (you can use the demo video for face detection, or similar videos), performs face detection, generates a video with detection bounding boxes, and saves it as ./out.h264.

Checking:

Play the input video file and the generated video file “./out.h264” with any media player you prefer, for example VLC or FFplay. In the output video, blue boxes should surround the faces of people and follow their movement, while the input video shows no such boxes.

File to DP

Invoking "sudo 04.file-ssd-dp.sh" takes the first argument passed to the script as the path to the H264 video file (you can use the demo video for ADAS SSD, or similar videos), performs vehicle detection, generates a video with detection bounding boxes, and displays it on the monitor.

Checking:

You should be able to see a video of highway driving, with the detection of vehicles in a bounding box.

Additional configuration options for smartcam invocation

The example scripts and the Jupyter notebook show the capability of smartcam for specific configurations. More combinations can be made based on the options provided by smartcam. Invoke smartcam --help to get the detailed application options listed below.

Usage

smartcam [OPTION?] - Application for face detection on SOM board of Xilinx.

Help Options:

-h, --help Show help options

--help-all Show all help options

--help-gst Show GStreamer Options

Application Options:

-m, --mipi= use MIPI camera as input source, auto detect, fail if no mipi available.

-u, --usb=media_ID usb camera video device id, e.g. 2 for /dev/video2

-f, --file=file path location of h26x file as input

-i, --infile-type=h264 input file type: [h264 | h265]

-W, --width=1920 resolution w of the input

-H, --height=1080 resolution h of the input

-r, --framerate=30 framerate of the input

-t, --target=dp [dp|rtsp|file]

-o, --outmedia-type=h264 output file type: [h264 | h265]

-p, --port=5000 Port to listen on (default: 5000)

-a, --aitask select AI task to be run: [facedetect|ssd|refinedet]

-n, --nodet no AI inference

-A, --audio RTSP with I2S audio input

-R, --report report fps

-s, --screenfps display fps on screen, note this will cause performance degradation.

--ROI-off turn off ROI (Region-of-Interest)

--control-rate=low-latency Encoder parameter control-rate

Supported value:

((0): disable (1): variable (2): constant

(2130706434): capped-variable

(2130706433): low-latency)

--target-bitrate=3000 Encoder parameter target-bitrate

--gop-length=60 Encoder parameter gop-length

--profile Encoder parameter profile.

Default: h264: constrained-baseline; h265: main

Supported value:

(H264: constrained-baseline, baseline, main, high, high-10, high-4:2:2, high-10-intra, high-4:2:2-intra

H265: main, main-intra, main-10, main-10-intra, main-422-10, main-422-10-intra)

--level Encoder parameter level

Default: 4

Supported value:

(4, 4.1, 5, 5.1, 5.2)

--tier Encoder parameter tier

Default: main

Supported value:

(main, high)

--encodeEnhancedParam String for fully customizing the encoder in the form "param1=val1, param2=val2,...", where paramn is the name of the encoder parameter

For detailed info about the parameter name and value range, just run gst-inspect-1.0 omxh264enc / gst-inspect-1.0 omxh265enc based on encoding type selected by option "--outmedia-type", the parameter could be any of the listed parameters except "control-rate, target-bitrate, gop-length" which have dedicated options as above.
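The "--encodeEnhancedParam" string format described above can be assembled programmatically. The Python sketch below joins name and value pairs in the "param1=val1, param2=val2" form and rejects the three parameters that have dedicated options. The function name is hypothetical, and the parameter names in the example are illustrative only; confirm the names and value ranges with gst-inspect-1.0 on the board.

```python
from typing import Dict

# Parameters that have dedicated smartcam options and must not appear in the string.
DEDICATED_OPTIONS = {"control-rate", "target-bitrate", "gop-length"}


def build_enhanced_param(params: Dict[str, object]) -> str:
    """Join encoder parameters into the "param1=val1, param2=val2" form."""
    clash = sorted(DEDICATED_OPTIONS.intersection(params))
    if clash:
        raise ValueError(f"use the dedicated smartcam options for: {', '.join(clash)}")
    return ", ".join(f"{name}={value}" for name, value in params.items())


if __name__ == "__main__":
    # Illustrative parameter names; check gst-inspect-1.0 omxh264enc for the real list.
    print(build_enhanced_param({"b-frames": 0, "num-slices": 8}))
```

The resulting string would be passed as a single quoted argument after --encodeEnhancedParam.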

Examples of supported combinations sorted by input are outlined below

If using the command line to invoke the smartcam, stop the process via CTRL-C prior to starting the next instance.

MIPI Input (IAS sensor input):

output: RTSP

sudo smartcam --mipi -W 1920 -H 1080 --target rtsp

sudo smartcam --mipi -W 1920 -H 1080 --target rtsp --audio

output: DP

sudo smartcam --mipi -W 1920 -H 1080 --target dp

output: file

sudo smartcam --mipi -W 1920 -H 1080 --target file

Note: The output file is “./out.h264”.

File input (file on file system):

Note: You must update the command to point to the specific file you want as the input source.

output: RTSP

sudo smartcam --file ./test.h264 -i h264 -W 1920 -H 1080 -r 30 --target rtsp

output: DP

sudo smartcam --file ./test.h264 -i h264 -W 1920 -H 1080 -r 30 --target dp

output: file

sudo smartcam --file ./test.h264 -i h264 -W 1920 -H 1080 -r 30 --target file

Note: The output file is “./out.h264”.

USB input (USB webcam):

Note: You must ensure that the specified width, height, and framerate are supported by your USB camera.

output: RTSP

sudo smartcam --usb 1 -W 1920 -H 1080 -r 30 --target rtsp

output: DP

sudo smartcam --usb 1 -W 1920 -H 1080 -r 30 --target dp

output: file

sudo smartcam --usb 1 -W 1920 -H 1080 -r 30 --target file

Note: The output file is “./out.h264”.
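The combinations above follow one pattern: a single input source, a resolution, an optional framerate, and a target. A minimal Python sketch that builds such command lines is shown below. The function name and its validation rules (exactly one input source, audio only with the RTSP target) are assumptions derived from the examples and the audio description in this tutorial, not behavior enforced by smartcam itself.

```python
from typing import List, Optional

TARGETS = ("dp", "rtsp", "file")


def smartcam_command(*, mipi: bool = False, usb: Optional[int] = None,
                     file: Optional[str] = None, infile_type: str = "h264",
                     width: int = 1920, height: int = 1080,
                     framerate: Optional[int] = None, target: str = "dp",
                     audio: bool = False) -> List[str]:
    """Build a smartcam argument list for one input source and one output target."""
    # Assumption: exactly one of the MIPI, USB, or file sources is selected.
    if sum([mipi, usb is not None, file is not None]) != 1:
        raise ValueError("select exactly one input source: mipi, usb, or file")
    if target not in TARGETS:
        raise ValueError(f"target must be one of {TARGETS}")
    # Assumption: audio capture is only meaningful for the RTSP target.
    if audio and target != "rtsp":
        raise ValueError("audio input is only used with the rtsp target")
    cmd = ["sudo", "smartcam"]
    if mipi:
        cmd.append("--mipi")
    elif usb is not None:
        cmd += ["--usb", str(usb)]
    else:
        cmd += ["--file", str(file), "-i", infile_type]
    cmd += ["-W", str(width), "-H", str(height)]
    if framerate is not None:
        cmd += ["-r", str(framerate)]
    cmd += ["--target", target]
    if audio:
        cmd.append("--audio")
    return cmd


if __name__ == "__main__":
    print(" ".join(smartcam_command(file="./test.h264", framerate=30, target="dp")))
```

As noted earlier, stop any running smartcam process with CTRL-C before launching a command built this way.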

File structure of the application

The application is installed as:

Binary File Directory: /opt/xilinx/bin

| filename | description |
|---|---|
| smartcam | main app |

| filename | description |
|---|---|
| 01.mipi-rtsp.sh | call smartcam to run face detection and send out an RTSP stream. |
| 02.mipi-dp.sh | call smartcam to run face detection and display on the DP display. |
| 03.file-file.sh | call smartcam to run face detection on an input h264/5 file and generate an output h264/5 file with detection boxes. |

Configuration File Directory: /opt/xilinx/share/ivas/smartcam/${AITASK}

AITASK = “facedetect” | “refinedet” | “ssd”

| filename | description |
|---|---|
| preprocess.json | Config of preprocessing for AI inference |
| aiinference.json | Config of AI inference (facedetect\|refinedet\|ssd) |
| drawresult.json | Config of bounding box drawing |

Jupyter Notebook Directory: /opt/xilinx/share/notebooks/smartcam

| filename | description |
|---|---|
| smartcam.ipynb | Jupyter notebook file for MIPI/USB --> DP/RTSP demo. |

Next Steps

Go back to the KV260 SOM Smart camera design start page

License

Licensed under the Apache License, Version 2.0 (the “License”); you may not use this file except in compliance with the License.

You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Copyright© 2021 Xilinx