Attention

This product has reached its End Of Life. All new designs should migrate to MA35D.

Using FFmpeg

This page documents how to use FFmpeg with the Xilinx Video SDK.

Introduction

FFmpeg is an industry-standard, open-source, widely used utility for handling video. FFmpeg has many capabilities, including encoding and decoding most common video compression formats, encoding and decoding audio, and encapsulating and extracting audio and video from transport streams. The Xilinx Video SDK includes an enhanced version of FFmpeg that can communicate with the hardware accelerated transcode pipeline in Xilinx devices.

It is not within the scope of this document to provide an exhaustive guide on the usage of FFmpeg. Various resources can be found online.

The following sections describe the options used with FFmpeg to configure the various hardware accelerators available on Xilinx devices.

Example Commands

A simple FFmpeg command for accelerated transcoding with the Xilinx Video SDK will look similar to this one:

ffmpeg -c:v mpsoc_vcu_h264 -i infile.mp4 -c:v mpsoc_vcu_hevc -s:v 1920x1080 -b:v 1000K -r 60 -f mp4 -y transcoded.mp4

There are many other ways in which FFmpeg can be used to leverage the video transcoding features of Xilinx devices. The FFmpeg tutorials included in this repository illustrate how to run FFmpeg for encoding, decoding, and transcoding, with and without ABR scaling.

General FFmpeg Options

| Options | Descriptions |
|---|---|
| -i | The input file. |
| -c:v | Specify the video codec. This option must be set for any video stream sent to a Xilinx device. Valid values are mpsoc_vcu_hevc (for HEVC) or mpsoc_vcu_h264 (for H.264). |
| -s | The frame size (WxH). For example 1920x1080 or 3840x2160. |
| -f | The container format. |
| -r | The frame rate in fps (Hz). |
| -filter_complex | Used to specify ABR scaling options. Consult the section about Using the Xilinx Multiscale Filter for more details on how to use this option. |
| -xlnx_hwdev | Global option used to specify on which Xilinx device the FFmpeg job should run. Consult the Using Explicit Device IDs section for more details on how to use this option. Valid values are non-negative integers. Default is device 0. |
| -lxlnx_hwdev | Component-level option used to specify on which Xilinx device a specific component of the FFmpeg job should be run. When set, it overrides the global -xlnx_hwdev option for that component. Consult the Using Explicit Device IDs section for more details on how to use this option. Valid values are non-negative integers. Default is device 0. |
| | Log latency information to syslog. Valid values: 0 (disabled, default) and 1 (enabled). |
| | Configures the FFmpeg log level. Setting this option to debug displays comprehensive debug information about the job. |

Video Decoding

For the complete list of features and capabilities of the Xilinx hardware decoder, refer to the Video Codec Unit section of the Specs and Features chapter of the documentation.

The Xilinx video decoder is leveraged in FFmpeg by setting the -c:v option to mpsoc_vcu_hevc for HEVC or to mpsoc_vcu_h264 for H.264.

The table below describes all the options for the Xilinx video decoder.

| Options | Descriptions |
|---|---|
| | Enable low-latency mode. Valid values: 0 (default) and 1. Setting this flag to 1 reduces decoding latency when splitbuff-mode is also enabled. IMPORTANT: This option should not be used with streams containing B frames. |
| | Configure decoder in split/unsplit input buffer mode. Valid values: 0 (default) and 1. The split buffer mode hands off buffers to the next pipeline stage earlier. Enabling both splitbuff-mode and low-latency reduces decoding latency. |
| -entropy_buffers_count | Number of internal entropy buffers. Valid values: 2 (default) to 10. Can be used to improve the performance for input streams with a high bitrate (including 4k streams) or a high number of reference frames. 2 is enough for most cases. 5 is the practical limit. |

Video Encoding

For the complete list of features and capabilities of the Xilinx hardware encoder, refer to the Video Codec Unit section of the Specs and Features chapter of the documentation.

The Xilinx video encoder is leveraged in FFmpeg by setting the -c:v option to mpsoc_vcu_hevc for HEVC or to mpsoc_vcu_h264 for H.264.

The table below describes all the options for the Xilinx video encoder.

| Options | Descriptions |
|---|---|
| -b:v | Specify the video bitrate. Can be specified in Mb or Kb. For example -b:v 1M or -b:v 1000K. The bitrate can also be adjusted dynamically. Consult the Dynamic Encoder Parameters section for more details on how to change this option during runtime. |
| | Maximum bitrate. Valid values: 0 to 3.5e+10 (default 5e+06). You may want to use this to limit the encoding bitrate if you have not specified a -b:v bitrate. |
| | GOP size. Set the GOP size to 2x the frame rate for a 2 second GOP. The maximum supported GOP size is 1000. |
| | Aspect ratio. Valid values: 0 to 3 (default 0). (0) auto - 4:3 for SD video, 16:9 for HD video, unspecified for unknown format; (1) 4:3; (2) 16:9; (3) none - Aspect ratio information is not present in the stream. |
| -cores | Number of encoder cores in the Xilinx device to utilize. Valid values: 0 to 4 (default 0). (0) auto. The FFmpeg encoder plugin automatically determines how many encoder cores are needed to sustain real-time performance (e.g. 1 for 1080p60, 4 for 4K60). The -cores option can be used to manually specify how many encoder cores are to be used for a given job. When encoding file-based clips with a resolution of 1080p60 or lower, leveraging additional cores may increase performance. This option will provide diminishing returns when multiple streams are processed on the same device. This option has no impact on live streaming use cases, as a video stream cannot be processed faster than it is received. |
| -slices | Number of slices to operate on at once within a core. Valid values: 1 to 68 (default 1). Slices are a fundamental part of the stream format. You can operate on slices in parallel to increase the speed at which a stream is processed. However, operating on multiple “slices” of video at once will have a negative impact on video quality. This option must be used when encoding 4k streams to H.264 in order to sustain real-time performance. The maximum practical value for this option is 4 since there are 4 encoder cores in a device. |
| | Encoding level restriction. If the user does not set this value, the encoder will automatically assign an appropriate level based on resolution, frame rate and bitrate. Valid values for H.264: 1, 1.1, 1.2, 1.3, 2, 2.1, 2.2, 3, 3.1, 3.2, 4, 4.1, 4.2, 5, 5.1, 5.2. Valid values for HEVC: 1, 2, 2.1, 3, 3.1, 4, 4.1, 5, 5.1, 5.2. |
| | Set the encoding profile. Valid values for H.264: baseline (66), main (77), high (100, default), high-10 (110), high-10-intra (2158). Valid values for HEVC: main (0, default), main-intra (1), main-10 (2), main-10-intra (3). |
| | Set the encoding tier (HEVC only). Valid values: 0 to 1 (default is 0). (0) main - Main tier; (1) high - High tier. |
| | Number of B frames. Valid values: 0 to 4 (default is 2). For tuning use 1 or 2 to improve video quality at the cost of latency. Consult the B Frames section for more details on how to use this option. |
| -lookahead_depth | Number of frames to lookahead for qp maps. Valid values: 0 (default) to 20. For tuning set this to 20 to improve subjective video quality at the cost of latency. Consult the Lookahead section for more details on how to use this option. |
| | Enable spatial AQ. Valid values: 0 or 1 (default). (0) disable; (1) enable - Default. Consult the Adaptive Quantization section for more details on how to use this option. |
| | Percentage of spatial AQ gain. Valid values: 0 to 100 (default 50). Consult the Adaptive Quantization section for more details on how to use this option. |
| | Enable temporal AQ. Valid values: 0 or 1 (default). (0) disable; (1) enable - Default. Consult the Adaptive Quantization section for more details on how to use this option. |
| -scaling-list | Determine if the quantization values are auto scaled. Valid values: 0, 1 (default). (0) flat - Flat scaling list mode, improves objective metrics; (1) default - Default scaling list mode, improves video quality. Consult the Scaling List section for more details on how to use this option. |
| -qp-mode | QP control mode. Valid values: 0 to 2 (default 1). (0) uniform; (1) auto - default; (2) relative_load. For best objective scores use uniform. For best subjective quality use auto or relative_load. Consult the Adaptive Quantization section for more details on how to use this option. |
| -control-rate | Set the Rate Control mode. Valid values: 0 to 3 (default is 1). (0) Constant QP; (1) Constant Bitrate - default; (2) Variable Bitrate; (3) Low Latency. |
| | Minimum QP value allowed for rate control. Valid values: 0 to 51 (default 0). This option has no effect when -control-rate is set to Constant QP (0). |
| | Maximum QP value allowed for rate control. Valid values: 0 to 51 (default 51). This option has no effect when -control-rate is set to Constant QP (0). |
| | Slice QP. Valid values: -1 to 51 (default -1). (-1) auto. This sets the QP values when -control-rate is Constant QP (0). The specified QP value applies to all the slices. This parameter can also be used to provide the QP for the first Intra frame when -lookahead_depth = 0. When set to -1, the QP for the first Intra frame is internally calculated. |
| | IDR frame frequency. Valid values: -1 to INT_MAX32 (default -1). If this option is not specified, a closed GOP is generated: the IDR periodicity is aligned with the GOP size and an IDR frame is inserted at the start of each GOP. To insert IDR frames less frequently, use a value which is a multiple of the GOP size. |
| | Force insertion of IDR frames. Valid values: time[,time…] or expr:expr. Force an IDR frame to be inserted at the specified frame number(s). Consult the Dynamic IDR Frame Insertion section for more details on how to use this option. |
| | Set advanced encoding options. Valid values: dynamic-params=<options file>. Consult the Dynamic Encoder Parameters section for more details on how to use this option. |
| -tune-metrics | Enable tuning video quality for objective metrics. Valid values: 0, 1 (default 0). (0) disable - Disable tune metrics; (1) enable - Enable tune metrics. Enabling -tune-metrics automatically forces -qp-mode = uniform and -scaling-list = flat, overriding any explicit user settings for these two options. This option improves objective quality by giving equal importance to all the blocks in the frame: the same quantization parameters and transform coefficients are used for all of them. This option should be disabled when measuring subjective quality or visually checking the video. This option should be enabled when measuring objective metrics such as PSNR/SSIM/VMAF. |
| | Add a vsync frame. Valid values: 0, 1. Set this to 0 to prevent extra frames being added. |
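The GOP size guidance in the table above (2x the frame rate for a 2 second GOP, capped at 1000) can be sketched as a small shell helper. This is only an illustration: gop_size is a hypothetical function, not part of the Xilinx Video SDK, it handles integer frame rates only, and the -g flag shown in the usage line is FFmpeg's standard GOP-size option, assumed here to be the one this table refers to.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: gop_size FRAME_RATE SECONDS
# Prints the GOP length in frames, clamped to the documented maximum of 1000.
gop_size() {
    gs_frames=$(( $1 * $2 ))
    if [ "$gs_frames" -gt 1000 ]; then
        gs_frames=1000
    fi
    echo "$gs_frames"
}

# Example: build the GOP argument for a 60 fps stream with a 2 second GOP.
# "-g" is assumed to be the GOP-size option (standard FFmpeg).
echo "-g $(gop_size 60 2)"
```

The result can be substituted into an encoder command line alongside the -r value it was derived from.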

Video Scaling

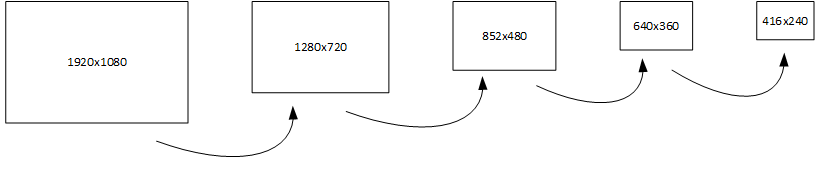

The figure below illustrates a scaling ladder with a 1920x1080 input and 4 outputs with resolutions of 1280x720, 852x480, 640x360, and 416x240, respectively.

Scaling ladder: the output from one rung is passed to the next for further scaling¶

IMPORTANT: The scaler is tuned for downscaling and expects non-increasing resolutions in an ABR ladder. Increasing resolutions between outputs is supported but will reduce video quality. For best results, configure the outputs in descending order: the frame rate and resolution of a given output should be less than or equal to those of the previous output. Since the output of one scaling stage is passed as an input to the next, video quality will be negatively affected if the frame rate is increased after it has been lowered.
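The descending-order recommendation can be checked before a job is launched. The sketch below assumes the ladder is given as a list of WxH strings; ladder_is_descending is a hypothetical helper, not an SDK tool, and it only compares resolutions (frame rates are not checked).

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK): succeeds when no
# rung of an ABR ladder is larger than the rung before it.
# Usage: ladder_is_descending WxH [WxH ...]
ladder_is_descending() {
    ld_prev_w=""
    ld_prev_h=""
    for ld_res in "$@"; do
        ld_w=${ld_res%x*}   # text before the "x"
        ld_h=${ld_res#*x}   # text after the "x"
        if [ -n "$ld_prev_w" ]; then
            # Reject any rung that grows in width or height.
            if [ "$ld_w" -gt "$ld_prev_w" ] || [ "$ld_h" -gt "$ld_prev_h" ]; then
                echo "rung $ld_res is larger than ${ld_prev_w}x${ld_prev_h}" >&2
                return 1
            fi
        fi
        ld_prev_w=$ld_w
        ld_prev_h=$ld_h
    done
    return 0
}

# Example: the ladder from the figure, starting with the 1920x1080 input.
ladder_is_descending 1920x1080 1280x720 852x480 640x360 416x240 && echo "ladder ok"
```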

For the complete list of features and capabilities of the Xilinx hardware scaler, refer to the Adaptive Bitrate Scaler features section of the Specs and Features chapter of the documentation.

The Xilinx hardware scaler is leveraged in FFmpeg by using the multiscale_xma complex filter and the FFmpeg filter graph syntax. This section describes the options of the multiscale_xma complex filter.

- multiscale_xma

Filter implementing the Xilinx ABR multiscaler. Takes one input and up to 8 output streams. The complete list of options is described below.

| Options | Description |
|---|---|
| outputs | Specify the number of scaler outputs. Valid values are integers between 1 and 8. |
| out_{N}_width | Specify the width of each of the scaler outputs. The output number {N} must be an integer value between 1 and 8, and must not exceed the number of outputs specified with outputs. Valid values are integers between 128 and 3840, in multiples of 4. |
| out_{N}_height | Specify the height of each of the scaler outputs. The output number {N} must be an integer value between 1 and 8, and must not exceed the number of outputs specified with outputs. Valid values are integers between 128 and 2160, in multiples of 4. |
| out_{N}_rate | Specify the frame rate of each of the scaler outputs. By default, the scaler uses the input stream frame rate for all outputs. While the encoder supports frame dropping with the -r option, there is also hardware support in the scaler for dropping frames. Dropping frames in the scaler is preferred since it saves scaler bandwidth, allowing the scaler and encoder to operate more efficiently. The output number {N} must be an integer value between 1 and 8, and must not exceed the number of outputs specified with outputs. Valid values: full and half (default full). The first output has to be at full rate (out_1_rate=full). |
| | Specify the ID of the device on which the scaler should be executed. Valid values: integers from -1 to INT_MAX (default -1). This option is primarily used for multi-device use cases. When set, it overrides the global -xlnx_hwdev option. Consult the Using Explicit Device IDs section for more details on how to use this option. |
| | Enable pipelining in multiscaler. Pipelining provides additional performance at the cost of additional latency (2 frames). By default, pipelining is automatically controlled based on where the scaler input is coming from. If the input is coming from the host, pipelining is enabled. If the input is coming from the decoder, pipelining is disabled. Explicitly enabling pipelining has benefits in two situations: in 2-device use cases where the output of the scaler is transferred to the host, and in zero copy 4K ABR ladder use cases with multiple renditions. Valid values: -1 to 1 (default -1). (-1) auto; (0) disabled; (1) enabled. |
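The width and height limits listed in the table can be verified with a short shell check before building a filter graph. This is a minimal sketch: valid_scaler_size is a hypothetical helper name, and it only encodes the ranges stated above (width 128 to 3840, height 128 to 2160, both multiples of 4).

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: valid_scaler_size WIDTH HEIGHT
# Succeeds when both dimensions are within the documented scaler limits
# and are multiples of 4.
valid_scaler_size() {
    vs_w=$1
    vs_h=$2
    [ "$vs_w" -ge 128 ] && [ "$vs_w" -le 3840 ] && [ $((vs_w % 4)) -eq 0 ] &&
    [ "$vs_h" -ge 128 ] && [ "$vs_h" -le 2160 ] && [ $((vs_h % 4)) -eq 0 ]
}

# Example: report whether a candidate output size is acceptable.
if valid_scaler_size 848 480; then
    echo "848x480 is a valid scaler output size"
else
    echo "848x480 is not a valid scaler output size"
fi
```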

Using the Multiscale Filter

The filter graph specification for the multiscale_xma filter should be constructed in the following way:

Add the multiscale_xma filter to the graph

Set the number of scaler outputs

Set the width, height, and rate settings for each scaler output

Define the name of each scaler output

If the outputs are not to be encoded on the device, add xvbm_convert filters to the filter graph to copy the frames back to the host and convert them to AV frames.

The following example shows a complete command to decode, scale and encode to five different outputs across four resolutions:

ffmpeg -c:v mpsoc_vcu_h264 -i input.mp4 \
-filter_complex " \
multiscale_xma=outputs=4: \
out_1_width=1280: out_1_height=720: out_1_rate=full: \
out_2_width=848: out_2_height=480: out_2_rate=half: \
out_3_width=640: out_3_height=360: out_3_rate=half: \
out_4_width=288: out_4_height=160: out_4_rate=half \
[a][b][c][d]; [a]split[aa][ab]; [ab]fps=30[abb]" \
-map "[aa]" -b:v 4M -c:v mpsoc_vcu_h264 -f mp4 -y ./scaled_720p60.mp4 \
-map "[abb]" -b:v 3M -c:v mpsoc_vcu_h264 -f mp4 -y ./scaled_720p30.mp4 \
-map "[b]" -b:v 2500K -c:v mpsoc_vcu_h264 -f mp4 -y ./scaled_480p30.mp4 \
-map "[c]" -b:v 1250K -c:v mpsoc_vcu_h264 -f mp4 -y ./scaled_360p30.mp4 \
-map "[d]" -b:v 625K -c:v mpsoc_vcu_h264 -f mp4 -y ./scaled_288p30.mp4

This example can also be found in the FFmpeg introductory tutorials: Transcode With Multiple-Resolution Outputs.
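For ladders with many rungs, the multiscale_xma option string can be generated rather than typed by hand. The sketch below follows the construction steps of this section, assuming each output is described as WxH:rate; build_multiscale is a hypothetical helper, not an SDK tool, and it only prints the filter options. The output labels (such as [a][b][c][d]) and any further filters still have to be appended by the caller.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: build_multiscale WxH:rate [WxH:rate ...]
# Prints the multiscale_xma filter with one width/height/rate triple per output.
build_multiscale() {
    bm_n=0
    bm_opts=""
    for bm_spec in "$@"; do
        bm_n=$((bm_n + 1))
        bm_res=${bm_spec%%:*}    # "WxH" part
        bm_rate=${bm_spec#*:}    # "full" or "half"
        bm_w=${bm_res%x*}
        bm_h=${bm_res#*x}
        bm_opts="${bm_opts}:out_${bm_n}_width=${bm_w}:out_${bm_n}_height=${bm_h}:out_${bm_n}_rate=${bm_rate}"
    done
    printf 'multiscale_xma=outputs=%s%s\n' "$bm_n" "$bm_opts"
}

# Example: the four rungs used in the command above.
build_multiscale 1280x720:full 848x480:half 640x360:half 288x160:half
```

Generating the string keeps the outputs count and the out_{N} indices consistent when rungs are added or removed.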

Encoding Scaler Outputs

The outputs of an ABR ladder can be encoded on the device using either the mpsoc_vcu_h264 or the mpsoc_vcu_hevc codec.

All outputs must be encoded using the same codec.

Using Raw Scaler Outputs

To return raw video outputs from the ABR ladder, use the xvbm_convert filter to copy the frames from the device to the host and convert them to AV frames. The converted AV frames can then be used in FFmpeg software filters or directly saved to file as shown in this command:

ffmpeg -c:v mpsoc_vcu_h264 -i input.mp4 \
-filter_complex " \
multiscale_xma=outputs=4: \
out_1_width=1280: out_1_height=720: out_1_rate=full: \
out_2_width=848: out_2_height=480: out_2_rate=half: \
out_3_width=640: out_3_height=360: out_3_rate=half: \
out_4_width=288: out_4_height=160: out_4_rate=half \
[a][b][c][d]; [a]split[aa][ab]; [ab]fps=30[abb]; \
[aa]xvbm_convert[aa1];[abb]xvbm_convert[abb1];[b]xvbm_convert[b1];[c]xvbm_convert[c1]; \
[d]xvbm_convert[d1]" \
-map "[aa1]" -pix_fmt yuv420p -f rawvideo ./scaled_720p60.yuv \
-map "[abb1]" -pix_fmt yuv420p -f rawvideo ./scaled_720p30.yuv \
-map "[b1]" -pix_fmt yuv420p -f rawvideo ./scaled_480p30.yuv \
-map "[c1]" -pix_fmt yuv420p -f rawvideo ./scaled_360p30.yuv \
-map "[d1]" -pix_fmt yuv420p -f rawvideo ./scaled_288p30.yuv

This example can also be found in the FFmpeg introductory tutorials: Decode Only Into Multiple-Resolution Outputs.

Scaling and Encoding on Two Different Devices

The Xilinx Video SDK supports up to 32 scaled output streams per device, up to a maximum total equivalent bandwidth of 4Kp60. For some use cases, such as 4K ladders or 1080p ladders with many outputs, it may not be possible to scale or encode all streams on a single device. In this situation, it is possible to split the job across two devices, running part of the job on one device and the rest on another. This is accomplished by using the -lxlnx_hwdev option, which allows specifying the device on which a specific job component (decoder, scaler, encoder) should be run.

Consult the Using Explicit Device IDs section for more details on how to use the -lxlnx_hwdev option and work with multiple devices.

Performance Considerations

Encoded input streams with a high bitrate or with a high number of reference frames can degrade the performance of an ABR ladder. The -entropy_buffers_count decoder option can be used to help with this. A value of 2 is enough for most cases; 5 is the practical limit.

Considerations for 4K Streams

The Xilinx Video SDK solution supports real-time decoding and encoding of 4k streams with the following notes:

The Xilinx video pipeline is optimized for live-streaming use cases. For 4k streams with bitrates significantly higher than the ones typically used for live streaming, it may not be possible to sustain real-time performance.

Transcode pipelines split across two devices and decoding a 4K60 H.264 10-bit input stream will not perform at real-time speed.

4K60 encode-only and decode-only use cases involve significant transfers of raw data between the host and the device. This may impact the overall performance.

When decoding 4k streams with a high bitrate, increasing the number of entropy buffers using the -entropy_buffers_count option can help improve performance.

When encoding raw video to 4k, set the -s option to 3840x2160 to specify the desired resolution.

When encoding 4k streams to H.264, the -slices option is required to sustain real-time performance. A value of 4 is recommended. This option is not required when encoding to HEVC.
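The last note can be folded into a wrapper script so that the -slices option is only added when it is needed. The following is a sketch under stated assumptions: slices_args is a hypothetical helper, and treating "4k" as 3840x2160 or larger is an assumption made for this example.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: slices_args ENCODER WIDTH HEIGHT
# Prints "-slices 4" for H.264 encodes at 3840x2160 or above, and nothing
# otherwise (HEVC does not need the option; lower resolutions do not either).
slices_args() {
    sa_codec=$1
    sa_w=$2
    sa_h=$3
    if [ "$sa_codec" = "mpsoc_vcu_h264" ] && [ "$sa_w" -ge 3840 ] && [ "$sa_h" -ge 2160 ]; then
        echo "-slices 4"
    fi
}

# Example: show the extra arguments for a 4k H.264 encode.
echo "extra encoder args: $(slices_args mpsoc_vcu_h264 3840 2160)"
```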

Moving Data through the Video Pipeline

Automatic Data Movement

The Xilinx Video SDK takes care of moving data efficiently through the FFmpeg pipeline in these situations:

Individual operations:

Decoder input: encoded video is automatically sent from the host to the device

Scaler input: raw video is automatically sent from the host to the device

Encoder input: raw video is automatically sent from the host to the device

Encoder output: encoded video is automatically sent from the device to the host

Multistage pipelines

Pipelines with hardware accelerators only (such as transcoding with ABR ladder): the video frames remain on the device and are passed from one accelerator to the next, thereby avoiding unnecessary data movement between the host and the device

Pipelines with software filters: when using the output of the decoder or the scaler with an FFmpeg software filter, the video frames are automatically copied back to the host, as long as the software filter performs frame cloning. Examples of such filters include fps and split.

Explicit Data Movement

It is necessary to explicitly copy video frames from the device to the host in these situations:

Writing the output of the decoder or the scaler to file.

Using the output of the decoder or the scaler with an FFmpeg software filter which does not perform frame cloning.

Performing different operations on different devices, in which case the video frames must be copied from the first device to the host and then from the host to the second device.

This is done using the xvbm_convert filter.

IMPORTANT: Failing to use the xvbm_convert filter will result in garbage data being propagated through the processing pipeline.

- xvbm_convert

FFmpeg filter which converts and copies an XVBM frame on the device to an AV frame on the host. The pixel format of the frame on the host depends on the pixel format of the frame on the device. If the frame on the device is 8-bit, the frame will be stored as nv12 on the host. If the frame on the device is 10-bit, the frame will be stored as xv15 on the host. The xvbm_convert filter does not support other formats and does not support converting 8-bit frames to 10-bit frames.

Examples using the xvbm_convert filter can be found here:

FFmpeg tutorials Decode Only and Decode Only Into Multiple-Resolution Outputs

FFmpeg examples with Software Filters
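The pixel format rule described for xvbm_convert (8-bit frames arrive on the host as nv12, 10-bit frames as xv15) can be expressed as a small lookup when scripting around the filter. host_pix_fmt is a hypothetical helper used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: host_pix_fmt BIT_DEPTH
# Prints the host-side pixel format that xvbm_convert produces for a device
# frame of the given bit depth, as described in this section.
host_pix_fmt() {
    case "$1" in
        8)  echo nv12 ;;
        10) echo xv15 ;;
        *)  echo "unsupported bit depth: $1" >&2
            return 1 ;;
    esac
}

# Example: look up the host format for a 10-bit stream.
echo "10-bit frames are stored on the host as $(host_pix_fmt 10)"
```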

Working with Multiple Devices

By default (if no device identifier is specified) a job is submitted to device 0. When running large jobs or multiple jobs in parallel, device 0 is bound to run out of resources rapidly and additional jobs will error out due to insufficient resources.

By using the -lxlnx_hwdev and -xlnx_hwdev options, the different components (decoder, scaler, encoder) of a job can be individually submitted to a specific device. This makes it easy and straightforward to leverage the entire video acceleration capacity of your system, regardless of the number of cards and devices.

Consult the Using Explicit Device IDs section for more details on how to work with multiple devices.

Mapping Audio Streams

When the FFmpeg job has a single input and a single output, the audio stream of the input is automatically mapped to the output video.

When the FFmpeg job has multiple outputs, FFmpeg must be explicitly told which audio stream to map to each of the output streams. The example below implements a transcoding pipeline with an ABR ladder. The input audio stream is split into 4 identical copies using the asplit filter, one for each video output. Each audio copy is then uniquely mapped to one of the output video streams using the -map option.

ffmpeg -c:v mpsoc_vcu_h264 -i input.mp4 \
-filter_complex "multiscale_xma=outputs=4: \
out_1_width=1280: out_1_height=720: out_1_rate=full: \
out_2_width=848: out_2_height=480: out_2_rate=full: \
out_3_width=640: out_3_height=360: out_3_rate=full: \
out_4_width=288: out_4_height=160: out_4_rate=full \
[vid1][vid2][vid3][vid4]; [0:1]asplit=outputs=4[aud1][aud2][aud3][aud4]" \
-map "[vid1]" -b:v 3M -c:v mpsoc_vcu_h264 -map "[aud1]" -c:a aac -f mp4 -y output1.mp4 \
-map "[vid2]" -b:v 2500K -c:v mpsoc_vcu_h264 -map "[aud2]" -c:a aac -f mp4 -y output2.mp4 \
-map "[vid3]" -b:v 1250K -c:v mpsoc_vcu_h264 -map "[aud3]" -c:a aac -f mp4 -y output3.mp4 \
-map "[vid4]" -b:v 625K -c:v mpsoc_vcu_h264 -map "[aud4]" -c:a aac -f mp4 -y output4.mp4
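When the number of outputs varies, the asplit portion of the filter graph can be generated so that there is exactly one audio label per video output. The sketch below is illustrative only: audio_split_graph is a hypothetical helper, and it assumes, as in the command above, that the audio is stream 1 of the first input ([0:1]) and that labels are named aud1 to audN.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: audio_split_graph NUM_OUTPUTS
# Prints an asplit filter that fans the input audio out into NUM_OUTPUTS
# labelled copies: [aud1] ... [audN].
audio_split_graph() {
    as_n=$1
    as_labels=""
    as_i=1
    while [ "$as_i" -le "$as_n" ]; do
        as_labels="${as_labels}[aud${as_i}]"
        as_i=$((as_i + 1))
    done
    # [0:1] assumes the audio is stream index 1 of the first input.
    printf '[0:1]asplit=outputs=%s%s\n' "$as_n" "$as_labels"
}

# Example: the audio fan-out for a four-rung ladder.
audio_split_graph 4
```

Each generated label is then paired with the matching video label through a -map option, as in the command above.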

Rebuilding FFmpeg

There are two methods for rebuilding FFmpeg with the Xilinx Video SDK plugins enabled:

Using the complete source code

Using the git patch file

Using the Source Code

The sources/app-ffmpeg4-xma submodule contains the entire source code for the FFmpeg executable included with the Video SDK. This is a fork of the main FFmpeg GitHub repository (release 4.4, tag n4.4, commit ID dc91b913b6260e85e1304c74ff7bb3c22a8c9fb1) with a Xilinx patch applied to enable the Xilinx Video SDK plugins. Due to licensing restrictions, the FFmpeg executable included in the Video SDK is enabled with the Xilinx Video SDK plugins only.

You can rebuild the FFmpeg executable with optional plugins by following the instructions below. Additionally, comprehensive instructions for compiling FFmpeg can be found on the FFmpeg wiki page.

Make sure nasm and yasm are installed on your machine.

Navigate to the top of the Xilinx Video SDK repository:

cd /path/to/video-sdk

Make sure the sources have been downloaded from the repository:

git submodule update --init --recursive

Navigate to the directory containing the FFmpeg sources:

cd sources/app-ffmpeg4-xma

Optionally install FFmpeg plugins you wish to enable (either from source or from your package manager like yum or apt). For example: libx264 or libx265.

Configure FFmpeg with --enable flags to enable the desired plugins. The --enable-libxma2api flag enables the Xilinx Video SDK plugins. The command below will configure the Makefile to install the custom FFmpeg in the /tmp/ffmpeg directory. To install in another location, modify the --prefix and --datadir options:

./configure --prefix=/tmp/ffmpeg --datadir=/tmp/ffmpeg/etc --enable-x86asm --enable-libxma2api --disable-doc --enable-libxvbm --enable-libxrm --extra-cflags=-I/opt/xilinx/xrt/include/xma2 --extra-ldflags=-L/opt/xilinx/xrt/lib --extra-libs=-lxma2api --extra-libs=-lxrt_core --extra-libs=-lxrt_coreutil --extra-libs=-lpthread --extra-libs=-ldl --disable-static --enable-shared

Build and install the FFmpeg executable:

make -j && sudo make install

The /opt/xilinx/xcdr/setup.sh script puts the Xilinx-provided FFmpeg in the PATH environment variable. To use the newly built FFmpeg, update your PATH or provide the full path to the custom-built executable.
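One way to make the custom build take precedence is to prepend its directories to the search paths after setup.sh has been sourced. This is a minimal sketch, not an SDK-provided script: use_custom_ffmpeg is a hypothetical function, and it assumes the default layout produced by the --prefix option shown above (executables in bin and, because the build uses --enable-shared, libraries in lib).

```shell
#!/bin/sh
# Hypothetical helper (not part of the Xilinx Video SDK).
# Usage: use_custom_ffmpeg PREFIX
# Puts PREFIX/bin first in PATH and PREFIX/lib first in LD_LIBRARY_PATH so
# that the custom-built FFmpeg and its shared libraries are found first.
use_custom_ffmpeg() {
    uc_prefix=$1
    PATH="$uc_prefix/bin:$PATH"
    LD_LIBRARY_PATH="$uc_prefix/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
    export PATH LD_LIBRARY_PATH
}

# Example: select the build installed under /tmp/ffmpeg, then report which
# ffmpeg executable (if any) the shell now resolves.
use_custom_ffmpeg /tmp/ffmpeg
command -v ffmpeg || echo "no ffmpeg executable found on PATH"
```

Calling the function before sourcing setup.sh would let the Xilinx-provided FFmpeg shadow the custom one again, so the order matters.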

Using the Git Patch File

The sources/app-ffmpeg4-xma-patch folder contains a git patch file which can be applied to an FFmpeg fork to enable the Xilinx Video SDK plugins.

This patch is intended to be applied to FFmpeg n4.4. Applying it to earlier or later versions of FFmpeg may require manual edits to merge the changes successfully, and such versions are untested configurations.

The patch modifies FFmpeg and adds new plugins to initialize, configure and use Xilinx video accelerators.

The patch can be applied to an FFmpeg fork as follows:

Clone the n4.4 version of FFmpeg:

git clone https://github.com/FFmpeg/FFmpeg.git -b n4.4

After the git clone, you will have a directory named FFmpeg. Enter this directory:

cd FFmpeg

Copy the patch file into the FFmpeg directory:

cp /path/to/sources/app-ffmpeg4-xma-patch/0001-Support-for-Xilinx-U30-SDK-v2-for-FFmpeg-n4.4.patch .

Apply the patch:

git am 0001-Support-for-Xilinx-U30-SDK-v2-for-FFmpeg-n4.4.patch --ignore-whitespace --ignore-space-change

Optionally install FFmpeg plugins you wish to enable (either from source or from your package manager like yum or apt). For example: libx264 or libx265.

Configure FFmpeg with --enable flags to enable the desired plugins. The --enable-libxma2api flag enables the Xilinx Video SDK plugins. The command below will configure the Makefile to install the custom FFmpeg in the /tmp/ffmpeg directory. To install in another location, modify the --prefix and --datadir options:

./configure --prefix=/tmp/ffmpeg --datadir=/tmp/ffmpeg/etc --enable-x86asm --enable-libxma2api --disable-doc --enable-libxvbm --enable-libxrm --extra-cflags=-I/opt/xilinx/xrt/include/xma2 --extra-ldflags=-L/opt/xilinx/xrt/lib --extra-libs=-lxma2api --extra-libs=-lxrt_core --extra-libs=-lxrt_coreutil --extra-libs=-lpthread --extra-libs=-ldl --disable-static --enable-shared

Build and install the FFmpeg executable:

make -j && sudo make install

The /opt/xilinx/xcdr/setup.sh script puts the Xilinx-provided FFmpeg in the PATH environment variable. To use the newly built FFmpeg, update your PATH or provide the full path to the custom-built executable.